Realtime Motion Segmentation based Multibody Visual SLAMAbhijit Kundu RRC, IIIT Hyderabad 500032,...

Transcript of Realtime Motion Segmentation based Multibody Visual SLAMAbhijit Kundu RRC, IIIT Hyderabad 500032,...

-

Realtime Motion Segmentation basedMultibody Visual SLAM

Abhijit Kundu∗

RRC, IIITHyderabad 500032, India

K. Madhava KrishnaRRC, IIIT

Hyderabad 500032, [email protected]

C. V. JawaharCVIT, IIIT

Hyderabad 500032, [email protected]

ABSTRACTIn this paper, we present a practical vision based Simultane-ous Localization and Mapping (SLAM) system for a highlydynamic environment. We adopt a multibody Structurefrom Motion (SfM) approach, which is the generalization ofclassical SfM to dynamic scenes with multiple rigidly mov-ing objects. The proposed framework of multibody visualSLAM allows choosing between full 3D reconstruction orsimply tracking of the moving objects, which adds flexibil-ity to the system, for scenes containing non-rigid objects orobjects having insufficient features for reconstruction. Thesolution demands a motion segmentation framework thatcan segment feature points belonging to different motionsand maintain the segmentation with time. We propose a re-altime incremental motion segmentation algorithm for thispurpose. The motion segmentation is robust and is capa-ble of segmenting difficult degenerate motions, where themoving objects is followed by a moving camera in the samedirection. This robustness is attributed to the use of ef-ficient geometric constraints and a probability frameworkwhich propagates the uncertainty in the system. The mo-tion segmentation module is tightly coupled with featuretracking and visual SLAM, by exploring various feed-backsin between these modules. The integrated system can si-multaneously perform realtime visual SLAM and trackingof multiple moving objects using only a single monocularcamera.

1. INTRODUCTIONBoth SfM from computer vision and the SLAM in mobile

robotics research does the same job of estimating sensor mo-tion and structure of an unknown static environment. Themotivation behind vision based SLAM, is to estimate the3D scene structure and camera motion from an image se-quence in realtime so as to help guide robots. Vision basedSLAM [3, 11, 15, 17] and SfM systems [8] have been the

∗Corresponding author

Permission to make digital or hard copies of all or part of this work forpersonal or classroom use is granted without fee provided that copies arenot made or distributed for profit or commercial advantage and that copiesbear this notice and the full citation on the first page. To copy otherwise, torepublish, to post on servers or to redistribute to lists, requires prior specificpermission and/or a fee.ICVGIP ’10, December 12-15, 2010, Chennai, IndiaCopyright 2010 ACM 978-1-4503-0060-5/10/12 ...$10.00.

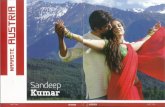

Figure 1: An Illustration of our system. Here thestatic background is being reconstructed, while themoving persons are being detected and tracked

subject of much investigation and research. But almost allthese approaches assume a static environment, containingonly rigid, non-moving objects. Moving objects are treatedthe same way as outliers and filtered out using robust statis-tics like RANSAC [5]. Though this may be a feasible solu-tion in less dynamic environments, but it soon fails as theenvironment becomes more and more dynamic. Also ac-counting for both the static and moving objects providesricher information about the environment. A robust solu-tion to the SLAM problem in dynamic environments willexpand the potential for robotic applications, especially inapplications which are in close proximity to human beingsand other robots. As put by [28], robots will be able to worknot only for people but also with people.

The solution to the moving object detection and segmen-tation problem will act as a bridge between the static SLAMor SfM and its counterpart for dynamic environments. But,motion detection from a freely moving monocular camera isan ill-posed problem and a difficult task. The moving cam-era causes every pixel to appear moving. The apparent pixelmotion of points is a combined effect of the camera motion,independent object motion, scene structure and camera per-spective effects. Different views resulting from the cameramotion are connected by a number of multiview geometricconstraints. These constraints can be used for the motiondetection task. Those inconsistent with the constraints canbe labeled as moving or outliers.

The last decade saw lot of developments in the “multi-body” extension [20, 21, 23, 27] to multi-view geometry.These methods are the natural generalization of the clas-sical structure from motion theory [4, 8] to the challenging

-

case of dynamic scenes involving multiple rigid-body mo-tions. Thus given a set of feature trajectories belonging todifferent independently moving bodies, multibody SfM esti-mates the number of moving objects in the scene, cluster thetrajectories on basis of motion, and then estimate the modelas in relative camera pose and 3D structure with respect toeach body/object. Thus it refers to the problem of fittingmultiple motion models to the scene, given a set of imagefeature trajectories.

By multibody visual SLAM, we indicate a realtime versionof the multibody SfM. The purpose of the multibody visualSLAM is to extract as much information from the environ-ment as possible, even those belonging to moving objects.We have taken a more practical point of view, where wechoose not to reconstruct all the moving objects. This deci-sion is motivated by the observation that foreground objectsare generally small and may move rapidly and non-rigidly,which makes them very difficult for full 3D reconstruction.Moreover certain applications may just need to know thepresence of moving objects, rather than its full 3D structure.The proposed framework offers the flexibility of choosing theobjects that needs to be reconstructed. Objects, not chosenfor reconstruction are simply tracked. Fig. 1 illustrates sucha system, where the static background is chosen for recon-struction, and objects moving independently are detectedand tracked over views.

The solution needs an incremental motion segmentationframework which can segment feature points belonging todifferent motions and maintain the segmentation with time.With every new frame it needs to verify the existing seg-mentation, and associate new features to one of the movingobjects. We propose a realtime incremental motion segmen-tation algorithm for aiding multibody visual SLAM. Themotion segmentation is robust and is capable of segmentingdifficult degenerate motions, where the moving objects is fol-lowed by a moving camera in the same direction. Efficientgeometric constraints are used in detecting these degener-ate motions. We introduce a probability framework thatrecursively updates feature probability and takes into con-sideration the uncertainty in camera pose estimation. Thefinal system integrates feature tracking, motion segmenta-tion and 3D reconstruction by visual SLAM. We introduceseveral feedback paths among these modules, which enablesthem to mutually benefit each other. The integrated systemallows simultaneous online 3D reconstruction and trackingof multiple moving objects using only a single monocularcamera. A full perspective camera model is used, and we donot have any restrictive assumptions on the camera motionor environment. Unlike many of the existing works, the pro-posed method is online and incremental in nature and scalesto arbitrarily long sequences.

In this paper, we explore in detail the motion segmenta-tion module (Sec. 5) and its interplay with the other modulesof feature tracking (Sec. 4) and visual SLAM (Sec. 6). Re-sults of the proposed system are shown in Sec. 7 for scenesinvolving degenerate motions and varying number of mov-ing objects on different datasets. Before that the previousworks are detailed in Sec. 2 and Sec. 3 gives a gist overviewof the whole system.

2. RELATED WORKSThe task of moving object detection and segmentation,

is much easier if a stereo sensor is available, which allows

additional constraints to be used for detecting independentmotion [1, 2]. However the problem is very much ill-posedfor monocular systems. In realtime monocular visual SLAMsystems, moving objects have not yet been dealt properly.In our literature survey, we have only found three works onvisual SLAM in dynamic environments: a work by Sola [26]and two other recent works of [30] and [13]. Sola [26] does anobservability analysis of detecting and tracking moving ob-jects with monocular vision. He proposes a BiCamSLAM [26]solution with stereo cameras to bypass the observability is-sues with mono-vision.

In [30], a 3D object tracker runs parallel with the monoc-ular camera SLAM [3] for tracking a predefined moving ob-ject. This prevents the visual SLAM framework from incor-porating moving features lying on that moving object. Butthe proposed approach does not perform moving object de-tection; so moving features apart from those lying on thetracked moving object can still corrupt the SLAM estima-tion. Also, they used a model based tracker, which can onlytrack a previously modeled object with manual initializa-tion.

The work by Migliore et al. [13] maintains two separatefilters: a monoSLAM filter [3] with the static features and abearing only tracker for the moving features. As concludedby Migliore et al. [13], the main disadvantage of their sys-tem is the inability to obtain an accurate estimate of themoving objects in the scene. This is due to the fact thatthey maintain separate filters for tracking each individualmoving feature, without any analysis of the structure of thescene; which for e.g., can be obtained from clustering pointsbelonging to same moving object or performing same mo-tion. This is also the reason that they are not able to usethe occlusion information of the tracked moving object, forextending the lifetime of features as in [30].

The problem of motion detection and segmentation froma moving camera has been a very active research area incomputer vision community. The multiview geometric con-straints used for motion detection, can be loosely dividedinto four categories. The first category of methods used forthe task of motion detection, relies on estimating a globalparametric motion model of the background. These meth-ods [10, 19, 29] compensate camera motion by 2D homog-raphy or affine motion model and pixels consistent with theestimated model are assumed to be background and out-liers to the model are defined as moving regions. However,these models are approximations which hold only for certainrestricted cases of camera motion and scene structure.

The problems with 2D homography methods led to plane-parallax [9, 22, 31] based constraints. The “planar-parallax”constraints represents the scene structure by a residual dis-placement field termed parallax with respect to a 3D refer-ence plane in the scene. The plane-parallax constraint wasdesigned to detect residual motion as an after-step of 2Dhomography methods. They are designed to detect motionregions when dense correspondences between small baselinecamera motions are available. Also, all the planar-parallaxmethods are ineffective when the scene cannot be approxi-mated by a plane.

Though the planar-parallax decomposition can be used foregomotion estimation and structure, the traditional multi-view geometry constrains like epipolar constraint in 2 viewsor trillinear constraints in 3 views and their extension toN views have proved to be much more effective in scene

-

understanding as in SfM or visual SLAM. This constraintsare well understood and are now textbook materials [4, 8].

Most of the multibody motion segmentation research [20,21, 23, 24, 27] has focused on theoretical and mathemat-ical aspects of the problem. They have only been exper-imented on very short sequences, with either zero or veryless outliers and noise-free feature trajectories. Also the highcomputation cost, frequent non-convergence of the solutionsand highly demanding assumptions; all have prevented themfrom being applied to real-world sequences. Only recentlyOzden et al. [18] discussed some of the practical issues, thatcomes up in multibody SfM. Though recent methods [6, 21]are more robust to outliers and noise, we are still far fromdoing multibody structure from motion in realtime.

3. OVERVIEWThe feature tracking module tracks existing feature points,

while new features are instantiated. The purpose of the mo-tion segmentation module is to segment these feature tracksbelonging to different motion bodies, and to maintain thissegmentation as new frames arrives. In the initializationstep, an algebraic multibody motion segmentation algorithmis used to segment the scene into multiple rigidly movingobjects. A decision is made as to which objects will beundergoing the full 3D structure and camera motion esti-mation. The background object is always chosen to undergothe full 3D reconstruction and camera motion estimationprocess. Other objects may either undergo full SfM estima-tion or just simply tracked, depending on the suitability forSfM estimation or application demand. On the objects, cho-sen for reconstruction, the standard monocular visual SLAMpipeline is used to obtain the 3D structure and camera poserelative to that object. For these objects, we compute aprobabilistic likelihood that a feature is moving along ormoving independently of that object. These probabilitiesare recursively updated as the features are tracked. Alsothe probabilities take care of uncertainty in pose estimationby the visual SLAM module. Features with less likelihood offitting one model are either mismatched features arising dueto tracking error or features belonging to either some otherreconstructed object or one of the unmodeled independentlymoving objects. For the unmodeled moving objects, we usespatial proximity and motion coherence to cluster the resid-ual feature tracks into independently moving entities.

The individual modules of feature tracking, motion seg-mentation and visual SLAM are tightly coupled and variousfeedback paths in between them are explored, which bene-fits each other. The motion model of a reconstructed objectestimated from the visual SLAM module helps in improvingthe feature tracking. Relative camera pose estimates fromSLAM are used by motion segmentation module to com-pute probabilistic model-fitness. The uncertainty in camerapose estimate is also propagated into this computation, soas to yield robust model-fitness scores. The computationof the 3D structure also helps in setting a tighter bound inthe geometric constraints, which results in more accurateindependent motion detection. Finally the results from themotion segmentation are fed back to the visual SLAM mod-ule. The motion segmentation prevents independent motionfrom corrupting the structure and motion estimation by thevisual SLAM module. This also ensures a less number ofoutliers in the reconstruction process of a particular object.So we need less number of RANSAC iterations [5] thus re-

sulting in improved speed in the visual SLAM module.

4. FEATURE TRACKINGFeature tracking is an important sub-module that needs to

be improved for multibody visual SLAM to take place. Con-trary to conventional SLAM, where the features belongingto moving objects are not important, we need to pay extracaution to feature tracking for multibody SLAM. For multi-body visual SLAM to take place, we should be able to getfeature tracks on the moving bodies also. This is challeng-ing as different bodies are moving at different speeds. Also3D reconstruction is only possible, when there are sufficientfeature tracks of a particular body.

In each image, a number of salient features (FAST cor-ners) are detected at different image pyramidal levels. Con-trary to conventional visual SLAM, new features are addedalmost every frame. However only a subset of these, de-tected on certain keyframes are made into 3D points. Theextra set of tracks helps in detecting independent motion. Apatch is generated on these feature locations and is matchedacross images on the basis of zero-mean SSD scores to pro-duce feature tracks. A number of constraints are used toimprove the feature matching:

a) Adaptive Search Window: Between a pair of im-age, features are matched within a fixed distance (window)from its location in one image. The size and shape of thiswindow is decided adaptively, based on the past motion ofthat particular body. For 3D points, whose depth has beencomputed from the vSLAM module, the 1D epipolar searchis reduced to just around the projection of the 3D point onthe image with predicted camera pose.

b) Warp matrix for patch: An affine warp is performedon the image patches to maintain view invariance from thepatch’s first and current observation. If the depth of a patchis unknown, only a rotation warp is made. For the imagepatch of the 3D points, which have been triangulated, a fullaffine warp is performed. This process is exactly same asthe patch search procedure in Klein et al. [11].

c) Occlusion Constraint: Motion segmentation givesrough occlusion information, i.e. it says whether some fore-ground moving object is occluding some other body. Thisinformation helps in data association, particularly for fea-tures belonging to a background body, which are predictedto lie inside the convex hull created from the feature pointsof a foreground moving object. These occluded features arenot associated, and are kept until they emerge out from oc-clusion.

d) Backward Match and Unicity Constraint: Whena match is found, we try to match that feature backwardin the original image. Matches, in which each point is theother’s strongest match is kept. Enforcing unicity constraintamounts to keeping only the single strongest, out of severalmatches for a single feature in the other image.

5. MOTION SEGMENTATIONThe input to the motion segmentation framework is fea-

ture tracks from feature tracking module, the camera rela-tive motion in reference to each reconstructed body from thevisual SLAM module, and the previous segmentation. Thetask of the motion segmentation module is that of modelselection so as to assign these feature tracks to one of thereconstructed bodies or some unmodeled independent mo-

-

tion. Efficient geometric constraints are used to form a prob-abilistic fitness score for each reconstructed object. Witheach new frame, existing features are tested for model-fitnessand unexplained features are assigned to one of the inde-pendently moving object. But before all this, we shouldinitialize the motion segmentation, which is described next.

5.1 Initialization of Motion SegmentationThe initialization routine for motion segmentation and vi-

sual SLAM is somewhat different from rest of the algorithm.We make use of an algebraic two-view multibody motion seg-mentation algorithm of RAS [21] to segment the input setof feature trajectories into multiple moving objects. Thereasons behind the choice of [21] among other algorithms isits direct non-iterative nature and faster computation time.This segmentation provides the system, the choice of mo-tion bodies for reconstruction. For the segment chosen forreconstruction, an initial 3D structure and camera motion iscomputed via epipolar geometry estimation as part of static-scene visual SLAM initialization routine.

5.2 Geometric ConstraintsBetween any two frames, the camera motion with respect

to the reconstructed body is obtained from the visual SLAMmodule. The geometric constraints are then estimated to de-tect independent motion with respect to the reconstructedbody. So for the static background, all moving objectsshould be detected as independent motion. Epipolar con-straint is the commonly used constraint that connects twoviews. Reprojection error or its first order approximationcalled Sampson error, based on the epipolar constraint isused throughout the structure and motion estimation by thevisual SLAM module. Basically they measure how far a fea-ture lies from the epipolar line induced by the correspondingfeature in the other view. Though these are the gold stan-dard cost functions for 3D reconstruction, it is not goodenough for independent motion detection. If a 3D pointmoves along the epipolar plane formed by the two views, itsprojection in the image move along the epipolar line. Thusin spite of moving independently, it still satisfies the epipolarconstraint. This is depicted in Fig. 2. This kind of degen-erate motion is quite common in real world scenarios, e.g.camera and an object are moving in same direction as incamera mounted in car moving through a road, or camera-mounted robot following behind a moving person. To detectdegenerate motion, we make use of the knowledge of cameramotion and 3D structure to estimate a bound in the positionof the feature along the epipolar line. We describe this asFlow Vector Bound (FVB) constraint.

5.2.1 Flow Vector Bound (FVB) Constraint:For a general camera motion involving both rotation and

translation R, t, the effect of rotation can be compensated byapplying a projective transformation to the first image. Thisis achieved by multiplying feature points in view1 with theinfinite homography H = KRK−1 [8]. The resulting featureflow vector connecting feature position in view2 to that ofthe rotation compensated feature position in view1, shouldlie along the epipolar lines. Now assume that our cameratranslates by t and pn, pn+1 be the image of a static pointX. Here pn is normalized as pn = (u, v, 1)

T . Attaching theworld frame to the camera center of the 1st view, the cameramatrix for the views are K[I|0] and K[I|t]. Also, if z is depth

Figure 2: Left: The world point P moves non-

degenerately to P′

and hence x′, the image of P

′

does not lie on the epipolar line corresponding tox. Right: The point P moves degenerately in the

epipolar plane to P′. Hence, despite moving, its im-

age point lies on the epipolar line corresponding tothe image of P.

of the scene point X, then inhomogeneous coordinates ofX is zK−1pn. Now image of X in the 2nd view, pn+1 =K[I|t]X. Solving we get, [8]

pn+1 = pn +Kt

z(1)

Equation 1 describes the movement of the feature pointin the image. Starting at point pn in In it moves alongthe line defined by pn and epipole, en+1 = Kt. The extentof movement depends on translation t and inverse depth z.From equation 1, if we know depth z of a scene point, we canpredict the position of its image along the epipolar line. Inabsence of any depth information, we set a possible bound indepth of a scene point as viewed from the camera. Let zmaxand zmin be the upper and lower bound on possible depthof a scene point. We then find image displacements alongthe epipolar line, dmin and dmax, corresponding to zmax andzmin respectively. If the flow vector of a feature, does notlie between dmin and dmax, it is more likely to be an imageof an independent motion.

The structure estimation from visual SLAM module helpsin reducing the possible bound in depth. Instead of settingzmax to infinity, known depth of the background enables insetting a more tight bound, and thus better detection ofdegenerate motion. The depth bound is adjusted on thebasis of depth distribution along the particular frustum.

The probability of satisfying flow vector bound constraintP (FV B) can be computed as

P (FV B) =1

1 +

„FV − dmean

drange

«2β (2)Here dmean =

dmin + dmax2

and drange =dmax − dmin

2,

where dmin and dmax are the bound in image displacements.The distribution function is similar to a Butterworth band-pass filter. P (FV B) has a high value if the feature lies insidethe bound given by FVB constraint, and the probability fallsrapidly as the feature moves away from the bound. Largerthe value of β, more rapidly it falls. In our implementation,we use β = 10.

5.3 Independent Motion ProbabilityIn this section we describe a recursive formulation based

on Bayes filter to derive the probability of a projected im-age point of a world point being classified as stationaryor dynamic. The relative pose estimation noise and image

-

pixel noise are bundled into a Gaussian probability distri-bution of the epipolar lines as derived in [8] and denoted byELi = N (µli,

Pli), where ELi refers to the set of epipolar

lines corresponding to image point i, and N (µli,P

li) refers

to the standard Gaussian probability distribution over thisset.

Let pni be the ith point in image In. The probability that

pni is classified as stationary is denoted as P (pn

i|In, In−1) =Pn,s(p

i) or Pn,si in short, where the suffix s signifying static.

Then with Markov approximation, the recursive probabilityupdate of a point being stationary given a set of images canbe derived as

P (pni|In+1, In, In−1) = ηsiPn+1,siPn,si (3)

Here ηsi is normalization constant that ensures the proba-

bilities sum to one.The term Pn,s

i can be modeled to incorporate the dis-tribution of the epipolar lines ELi. Given an image pointpn−1

i in In−1 and its corresponding point pni in In then

the epipolar line that passes through pni is determined as

lni = en × pni. The probability distribution of the feature

point being stationary or moving due to epipolar constraintis defines as

PEP,si =

1p2π|Σl|

exp(−12

(lni − µni)τΣ−1l (ln

i − µni)) (4)

However this does not take into account the misclassificationarising due to degenerate motion explained in previous sec-tions. To overcome this, the eventual probability is fused asa combination of epipolar and flow vector bound constraints:

Pn,si = α · PEP,si + (1− α) · PFV B,si (5)

where, α balances the weight of each constraint. A χ2 test isperformed to detect if the epipolar line ln

i due to the imagepoint is satisfying the epipolar constraint. When Epipolarconstraint is not satisfied, α takes a value close to 1 ren-dering the FVB probability inconsequential. As the epipo-lar line ln

i begins indicating a strong likelihood of satisfyingepipolar constraint, the role of FVB constraint is given moreimportance, which can help detect the degenerate cases.

An analogous set of equations characterize the probabil-ity of an image point being dynamic, which are not delin-eated here due to brevity of space. In our implementation,the envelope of epipolar lines [8] is generated by a set of Fmatrices distributed around the mean R, t transformationbetween two frames as estimated by visual SLAM module.Hence a set of epipolar lines corresponding to those ma-trices are generated and characterized by the sample set,

ELssi =

“l̂1i, l̂2

i.......l̂qi”

and the associated probability set,

PEL =“wl̂1

i, wl̂2i.......wl̂q

i”

where each wl̂ji is the probabil-

ity of that line belonging to the sample set ELssi computed

through usual Gaussian procedures. Then the probabilitythat an image point pn

i is static is given by:

Pn,si =

qXj=1

αj ·PEP,l̂jiS ·pni+(1−αj) ·PFV B,l̂ji

S ·pni ·wl̂ji

(6)where, PEP,l̂ji

S and PFV B,l̂jiS are the probabilities of the

point being stationary due to the respective constraints withrespect to the epipolar line l̂j

i.

5.4 Clustering Unmodeled MotionsFeatures with high probabilities of being dynamic are ei-

ther outliers or belongs to potential moving objects. Sincethese objects are often small, and highly dynamic, they arevery hard to be reconstructed. So instead we adopt a sim-ple move-in-unison model for them. Spatial proximity andmotion coherence is used to cluster these feature tracks intoindependently moving entities. By motion coherence, weuse the heuristic that the variance in the distance betweenfeatures belonging to same object should change slowly incomparison.

6. VISUAL SLAM FRAMEWORKThe monocular visual SLAM framework is that of a stan-

dard bundle adjustment visual SLAM [11, 14, 17]. On theobjects chosen for reconstruction, a 5-point algorithm [16]with RANSAC is used to estimate the initial epipolar ge-ometry, and subsequent pose is determined with 3-point re-section [7]. Some of the frames are selected as keyframes,which are used to triangulate 3D points. The set of 3Dpoints and the corresponding keyframes are then used bythe bundle adjustment process to iteratively minimize repro-jection error. The bundle adjustment is initially performedover the most recent keyframes, before attempting a globaloptimization. Our implementation closely follows to thatof [11, 14]. The system is implemented as multi-threadedprocesses. While one thread performs tasks like camera poseestimation, keyframe decision and addition, another back-end thread optimizes this estimate by bundle adjustment.

6.1 Feedback from Motion SegmentationHowever the main difference with the existing SLAM meth-

ods, is its interplay with the motion segmentation module.The motion segmentation prevents independent motion fromentering the SfM computation, which could have otherwiseresulted in incorrect initial SfM estimate and lead the bun-dle adjustment to converge to local minima. The feedbackresults in less number of outliers in the SfM process of a par-ticular object. Thus the SfM estimate is more well condi-tioned and less number of RANSAC iterations is needed [5].Apart from improvement in the camera motion estimate,the knowledge of the independent foreground objects com-ing from motion segmentation helps in the data associationof the features, which are currently being occluded by thatobject. For the foreground independent motions, we forma convex-hull around the tracked points clustered as an in-dependently moving entity. Existing 3D points lying insidethis region is marked as not visible and is not searched fora match. This prevents 3D features from unnecessary dele-tion and re-initialization, just because it was occluded by anindependent motion for some time.

7. EXPERIMENTAL RESULTSThe system has been tested on a number of real image

datasets, with various number and type of moving enti-ties. Details of the image sequences used for experimentsare listed in Table. 1.

7.1 Moving Box SequenceThis is same sequence as used in [30]. A previously static

box is being moved in front of the camera which is also mov-ing arbitrarily. However unlike [30], our method does not

-

Figure 3: Results from the Moving Box Sequence

uses any 3D model, and thus can work for any previouslyunseen object. As shown in Fig. 3 our algorithm reliablydetects the moving object just on the basis of motion con-straints. The difficulty with this sequence is that the fore-ground moving box is nearly white and thus provides veryless features. This sequence also highlights the detection ofpreviously static moving objects. Upon detection, 3D mappoints lying on the moving box are deleted. The convexhull formed on the moving box is shown in red shade. Thisdefines the occlusion mask, and corresponding actions aretaken as described in Sec. 6.1. Left image of Fig. 5 showsthe epipolar errors for an instance from this sequence.

Figure 4: Results from the New College Sequence.

7.2 New College SequenceWe tested our results on some dynamic parts of the New

College dataset [25]. Only left of the stereo image pairshas been used. In this sequence, the camera moves alonga roughly circular campus path, and three moving personspasses by the scene. Left image in Fig. 6 shows the aerialsnap of the environment and the camera trajectory. Yellowdenotes the part of the trajectory, when there is no inde-pendently moving body other than the static background.Green, red and blue denotes the part of trajectory where1st, 2nd and the 3rd “moving” persons were detected. Partof the trajectory colored black denotes the time when both2nd and 3rd moving persons are visible. Fig. 6 also showsa snap of the online map of the static background, recon-structed by the Visual SLAM framework. Fig. 4 depictsthe motion segmentation results for this sequence. Fig. 5shows an example of degenerate motion detection, as theflow vectors on the moving person almost move along epipo-lar lines, but they are being detected due to usage the FVBconstraint. This result verifies system’s performance for ar-bitrary camera trajectory, degenerate motion detection andchanging number of moving entities.

7.3 Indoor Lab SequenceThis is an indoor sequence taken from an inexpensive

hand-held camera. As the camera moves around, movingpersons enter and leave the scene. Fig. 7 shows the resultsfor this sequence. The bottom right picture in Fig. 7 showshow two spatially close independent motions is clustered cor-rectly by the algorithm. This sequence also involves a lot ofdegenerate motion as the camera and the persons move insame direction. The 3D structure estimation of the back-ground helps in setting a tighter bound in the FVB con-straint. The depth bound is adjusted on the basis of depthdistribution of the reconstructed background along the par-ticular frustum, as explained in Sec. 5.2.1.

7.4 System DetailsThe system is implemented as threaded processes in C++.

The open source libraries of TooN, OpenCV and sparse bun-dle adjustment [12] were used throughout the system. Therun-time of the algorithm depends on lot of factors. Themost significant of them are the number of bodies beingreconstructed, total number of independent motions in thescene, image resolution and bundle adjustment rules. Thesystem runs in realtime at the average of 25Hz in a standardlaptop, when a single body is chosen for reconstruction. Themotion segmentation module takes around 10ms for each im-age of 512x284 resolution and with 3 independently movingbodies.

7.5 DiscussionThe results verifies that the integrated system can simul-

taneously perform 3D reconstruction, camera pose estima-tion, and tracking of multiple moving objects using only asingle monocular camera, while maintaining realtime per-formance as listed in Table 1. Also the algorithm is online(casual) in nature as opposed to batch operation prevalentin multibody SfM literature. The proposed approach alsoscales to long sequences. We have shown results for de-generate motion (Fig. 5), arbitrary camera trajectory andchanging number of moving entities. In Fig. 6, we demon-strated the 3D reconstruction and camera pose estimation

-

Figure 5: Epipolar lines in Grey, flow vectors after rotation compensation is shown in orange. Cyan linesshow the distance to epipolar line. Features detected as independently moving are shown as red dots. Notethe near-degenerate independent motion in the middle and right image. However the use of FVB constraintenables efficient detection of degenerate motion.

Figure 6: LEFT: Aerial map and camera trajectory. Non Yellow denotes part of the trajectory where amoving person is being detected. RIGHT: The online map being of the background, 3D structure points arein green, while white line is the camera trajectory, and the blue dots are the key-frame positions.

in reference to the static background. 3D structure pointsare in green, while white line is the camera trajectory, andthe blue dots are the keyframe positions with respect to thebackground. The camera trajectory is also highlighted onthe aerial map of the test environment.

Figure 7: Results from the Indoor Lab Sequence

Table 1: Details of the datasetsDataset Resolution Length RuntimeMoving Box 320x240 718 images 30HzNew College 512x384 1500 images 18HzIndoor Lab 640x480 1720 images 22Hz

8. CONCLUSIONSThis paper presents a realtime incremental motion seg-

mentation algorithm that enables a practical multibody vi-sual SLAM algorithm. The framework segments featurepoints belonging to different motions and maintain this seg-mentation with time. Multiview geometric constraints wereexplored to successfully detect various independent motionincluding degenerate motions. A probabilistic frameworkin the model of a recursive Bayes filter is developed thatassigns probability to a feature being stationary or mov-ing based on geometric constraints. Uncertainty in camerapose estimation is also propagated into this probability es-timation. The different modules of motion segmentation,feature tracking and visual SLAM were integrated and wepresented, how each module helps the other one. The in-tegrated system can simultaneously perform realtime visualSLAM, and tracking of multiple moving objects using onlya single monocular camera. Experiments on various real im-

-

age sequences shows the efficacy of the method. The workpresented here can find immediate applications in variousrobotics applications involving dynamic scenes.

9. REFERENCES[1] M. Agrawal, K. Konolige, and L. Iocchi. Real-time

detection of independent motion using stereo. In IEEEWorkshop on Motion and Video Computing, 2005.

[2] Z. Chen and S. Birchfield. Person following with amobile robot using binocular feature-based tracking.In IEEE/RSJ International Conference on IntelligentRobots and Systems (IROS), 2007.

[3] A. Davison, I. Reid, N. Molton, and O. Stasse.MonoSLAM: Real-time single camera SLAM. IEEETransactions on Pattern Analysis and MachineIntelligence (TPAMI), 29(6):1052–1067, 2007.

[4] O. Faugeras, Q. Luong, and T. Papadopoulo. Thegeometry of multiple images. MIT press, 2001.

[5] M. Fischler and R. Bolles. Random sample consensus:A paradigm for model fitting with applications toimage analysis and automated cartography.Communications of the ACM, 24(6):381–395, 1981.

[6] C. G., S. Atev, and G. Lerman. Kernel SpectralCurvature Clustering (KSCC). In ICCV’09 Workshopon Dynamical Vision, 2009.

[7] B. Haralick, C. Lee, K. Ottenberg, and M. Nolle.Review and analysis of solutions of the three pointperspective pose estimation problem. InternationalJournal of Computer Vision, 13(3):331–356, 1994.

[8] R. I. Hartley and A. Zisserman. Multiple ViewGeometry in Computer Vision. Cambridge UniversityPress, 2nd edition, 2004.

[9] M. Irani and P. Anandan. A unified approach tomoving object detection in 2D and 3D scenes. IEEETransactions on Pattern Analysis and MachineIntelligence (TPAMI), 20(6):577–589, 1998.

[10] B. Jung and G. Sukhatme. Real-time motion trackingfrom a mobile robot. International Journal of SocialRobotics, pages 1–16.

[11] G. Klein and D. Murray. Parallel tracking andmapping for small AR workspaces. In Proc. SixthIEEE and ACM International Symposium on Mixedand Augmented Reality (ISMAR), 2007.

[12] M. A. Lourakis and A. Argyros. SBA: A SoftwarePackage for Generic Sparse Bundle Adjustment. ACMTrans. Math. Software, 36(1):1–30, 2009.

[13] D. Migliore, R. Rigamonti, D. Marzorati,M. Matteucci, and D. G. Sorrenti. Avoiding movingoutliers in visual SLAM by tracking moving objects.In ICRA’09 Workshop on Safe navigation in open anddynamic environments, 2009.

[14] E. Mouragnon, F. Dekeyser, P. Sayd, M. Lhuillier, andM. Dhome. Real time localization and 3dreconstruction. In Computer Vision and PatternRecognition (CVPR), 2006.

[15] J. Neira, A. Davison, and J. Leonard. Guest editorial,special issue in visual slam. IEEE Transactions onRobotics, 24(5):929–931, October 2008.

[16] D. Nister. An efficient solution to the five-pointrelative pose problem. IEEE Transactions on PatternAnalysis and Machine Intelligence (TPAMI),26(6):756–770, 2004.

[17] D. Nister, O. Naroditsky, and J. Bergen. Visualodometry. In Computer Vision and PatternRecognition (CVPR), 2004.

[18] K. E. Ozden, K. Schindler, and L. V. Gool. Multibodystructure-from-motion in practice. IEEE Transactionson Pattern Analysis and Machine Intelligence(TPAMI), 32:1134–1141, 2010.

[19] S. Pundlik and S. Birchfield. Motion segmentation atany speed. In Proceedings of British Machine VisionConference (BMVC), 2006.

[20] S. Rao, R. Tron, R. Vidal, and Y. Ma. Motionsegmentation via robust subspace separation in thepresence of outlying, incomplete, or corruptedtrajectories. In Computer Vision and PatternRecognition (CVPR), 2008.

[21] S. Rao, A. Yang, S. Sastry, and Y. Ma. RobustAlgebraic Segmentation of Mixed Rigid-Body andPlanar Motions from Two Views. InternationalJournal of Computer Vision (IJCV), 2010.

[22] H. Sawhney, Y. Guo, and R. Kumar. Independentmotion detection in 3D scenes. IEEE Transactions onPattern Analysis and Machine Intelligence (TPAMI),22(10):1191–1199, 2000.

[23] K. Schindler and D. Suter. Two-view multibodystructure-and-motion with outliers through modelselection. IEEE Transactions on Pattern Analysis andMachine Intelligence (TPAMI), 28(6):983–995, 2006.

[24] K. Schindler, J. U, and H. Wang. Perspective n-viewmultibody structure-and-motion through modelselection. In European Conference on ComputerVision (ECCV), 2006.

[25] M. Smith, I. Baldwin, W. Churchill, R. Paul, andP. Newman. The new college vision and laser data set.The International Journal of Robotics Research(IJRR), 28(5):595, 2009.

[26] J. Sola. Towards visual localization, mapping andmoving objects tracking by a mobile robot: a geometricand probabilistic approach. PhD thesis, LAAS,Toulouse, 2007.

[27] R. Vidal, Y. Ma, S. Soatto, and S. Sastry. Two-viewmultibody structure from motion. InternationalJournal of Computer Vision (IJCV), 68(1):7–25, 2006.

[28] C. Wang, C. Thorpe, S. Thrun, M. Hebert, andH. Durrant-Whyte. Simultaneous localization,mapping and moving object tracking. TheInternational Journal of Robotics Research (IJRR),26(9):889–916, 2007.

[29] J. Wang and E. Adelson. Layered representation formotion analysis. In Computer Vision and PatternRecognition (CVPR), 1993.

[30] S. Wangsiripitak and D. Murray. Avoiding movingoutliers in visual SLAM by tracking moving objects.In International Conference on Robotics andAutomation (ICRA), 2009.

[31] C. Yuan, G. Medioni, J. Kang, and I. Cohen.Detecting motion regions in the presence of a strongparallax from a moving camera by multiviewgeometric constraints. IEEE Transactions on PatternAnalysis and Machine Intelligence (TPAMI),29(9):1627–1641, 2007.