opus4.kobv.de · Zusammenfassung Parameterschätzung behandelt die Bestimmung des Zustands eines...


Asymptotic error estimates of the 3D MUSIC algorithm in the case of systematic errors

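German title: Asymptotische Fehlerschranken des 3D-MUSIC-Algorithmus im Fall von systematischen Fehlern (Asymptotic error bounds of the 3D MUSIC algorithm in the case of systematic errors)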

Submitted to the Faculty of Sciences of the Friedrich-Alexander-Universität Erlangen-Nürnberg

in fulfillment of the requirements for the doctoral degree Dr. rer. nat.

by

Patrick Luff

from Weißenburg i. Bay.

Approved as a dissertation by the Faculty of Sciences of the Friedrich-Alexander-Universität Erlangen-Nürnberg

Date of oral examination: 7 March 2013

Chair of the doctoral committee: Prof. Dr. Johannes Barth

First referee: Prof. Dr. Eberhard Bänsch

Second referee: Prof. Dr. Barbara Kaltenbacher

Don’t stop believin’

Journey, Escape (1981)

Zusammenfassung

Parameter estimation deals with determining the state of a given system on the basis of indirect sensor measurements. Among the many methods in use, the multiple signal classification (MUSIC) algorithm is regarded as one of the most successful in several fields of application, including magnetoencephalography, electroencephalography, radar and wireless communication. The algorithm scans the operating volume with a single-source model and computes the similarity between the source subspace and an approximated signal subspace. The source is assumed to be spatially static.

Most practical applications have to contend with inaccuracies of the underlying physical model. These inaccuracies affect the parameter estimation and lead to an incorrect result, even in the case of noiseless sensors. This thesis focuses on modeling and quantifying these estimation errors in the asymptotic case. To this end, the model errors are assumed to be random variables, and the expectation and higher-order moments of the estimation error are approximated.

Existing first-order approaches are extended to potentially second order. In addition, three-dimensional parameter estimation is considered. As it turns out, the three-dimensional case requires derivatives of eigensystems and singular systems. Closed-form solutions for both systems are given in this thesis. The numerical problems that arise are treated in a separate chapter. The numerical simplifications presented make it possible to compute asymptotic estimation errors in a practicable amount of time in the first place.

As a minor contribution, this thesis treats the case of a moving source. A sample-based particle filter algorithm is used, which approximates the posterior probability density of the system state given the measurements received. A new technique for weighting the samples based on the MUSIC algorithm is presented.

The presented concepts and algorithms are validated on a number of test cases.


Abstract

Parameter estimation deals with the problem of determining the state of a given system through indirect measurements by sensors. Among the abundance of methods in use, the multiple signal classification (MUSIC) algorithm is considered one of the most successful in various areas, such as magnetoencephalography, electroencephalography, radar and wireless communication. The algorithm searches the operating volume with a one-source model and computes the similarity of the source subspace to an estimated signal subspace. The source is assumed to be spatially static.

Most practical applications suffer from inaccuracies of the underlying physical model. These disturb the parameter estimation even in the case of noiseless sensors. This thesis focuses on modeling and quantifying these parameter estimation errors in the asymptotic case. To this end, the modeling errors are considered as random variables, and the expectation and higher moments of the parameter estimation error are approximated. Existing first-order approaches are augmented to near second order, and the case of three-dimensional parameter estimation is treated. The three-dimensional case necessitates derivatives of eigensystems and singular systems, for which closed-form solutions are provided. The resulting problems of numerical complexity are treated in a separate chapter. The presented numerical simplifications make it possible to compute asymptotic error estimates in feasible time in the first place.

As a minor contribution of this thesis, we investigate the case of a moving source in the operating volume. Our method of choice is a sample-based particle filter algorithm, which estimates the posterior probability density of the system state given the measurements. We propose a new technique for weighting the samples based on the MUSIC algorithm.

The presented concepts and algorithms are validated through a number of test cases.


Contents

Zusammenfassung
Abstract
Contents
List of figures
Acknowledgements

1 Introduction

2 Physical background
2.1 Electromagnetic source estimation
2.1.1 Magnetic induction
2.1.2 Measurement of induced voltage
2.1.3 Summary
2.2 Estimation of direction of arrival
2.3 Systematic noise

3 The MUSIC Algorithm
3.1 Introduction
3.2 Scalar-valued problem
3.3 Vector-valued problem

4 Derivatives of eigenvalues and eigenvectors
4.1 Introduction
4.2 Algorithm
4.2.1 The method of Lee and Jung
4.2.2 Extension to arbitrary derivatives

5 Derivatives of singular values and singular vectors
5.1 Introduction
5.2 Proposed method
5.2.1 Distinct singular values
5.2.2 Closed-form expressions of the derivatives
5.2.3 Repeated singular values
5.2.4 Example of repeated singular values with repeated derivatives

6 Derivatives of 3D MUSIC

7 Expectation of estimation error
7.1 Introduction
7.2 Scalar-valued x
7.2.1 Estimation error as ratio of quadratic forms
7.2.2 Moments of quadratic forms
7.2.3 Extension to higher order estimates
7.3 Vector-valued x
7.3.1 Estimation error as ratio of quadratic forms
7.3.2 First and second order expansion
7.3.3 Extension to higher order estimates
7.3.4 Higher order moments
7.3.5 Outlook and numerical problems

8 Implementation
8.1 General techniques
8.1.1 Economic computation of the trace of a matrix product
8.1.2 Storage issues
8.2 Gaussian, zero-mean distributed h
8.2.1 Scalar h
8.2.2 Multivariate h
8.2.3 Nonzero entries of the Gaussian covariance matrix
8.2.4 Summary and computational effort
8.2.5 Critical simplification step

9 Moving source: tracking via sequential Bayesian filtering
9.1 Introduction
9.2 Particle filtering

10 Simulations
10.1 Trace calculation
10.1.1 Convergence rate of truncated trace calculation
10.2 Moments of localization error
10.2.1 1D direction of arrival estimation
10.2.2 3D parameter estimation problem
10.2.3 Higher order moments
10.3 Particle filters

11 Conclusion and Outlook

A Appendix
A.1 Multi-index notation
A.2 Example of operator vec
A.3 Repeated singular values
A.4 Closed form of the inverse of a 3×3-matrix
A.5 Quadratic forms ratio expectation
A.5.1 Kronecker product calculus
A.5.2 Proof of (7.7)
A.6 Nonzero entries of the covariance matrix
A.6.1 Examples for possible configurations
A.6.2 Examples of the covariance matrix R
A.6.3 An algorithm to calculate all possible permutations of a given set
A.7 Particle filter algorithms


List of Figures

2.1 Left: direct solution of magnetic flux equation. Right: magnetic momentapproximation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

2.2 Equivalent circuit of simple measurement setup . . . . . . . . . . . . . . . 72.3 Left: polar coordinates. Right: EM plane wave impinging at origin . . . . 92.4 Systematic errors in the case of distorted sensors . . . . . . . . . . . . . . 11

3.1 Example of principal angles in three dimensions. Left: two two-dimensionalsubspaces. The principal angles are designated by α and β. β = 0 sincethe two subspaces share one dimension. Right: one one-dimensional andone two-dimensional subspace. The principal angle is the actual anglebetween vector and plane. . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

9.1 Illustration of SIR algorithm. From left to right: initial state and particledistribution, state and particle update, particle weighting, resamplingstage, particle 2 is abandoned, therefore particle 3 is duplicated. . . . . . 55

10.1 Number of permutations and associated number of indices. . . . . . . . . 5810.2 Effects of truncated calculation 1 . . . . . . . . . . . . . . . . . . . . . . . 5910.3 Effects of truncated calculation 2 . . . . . . . . . . . . . . . . . . . . . . . 6010.4 Sensor position and source angles . . . . . . . . . . . . . . . . . . . . . . 6110.5 Left: Mean error and estimated first moment of DOA estimation for

different incident angles for source 2. Right: absolute residual . . . . . . . 6210.6 Left: Mean error and estimated first moment of DOA estimation for

different systematic noise. Right: absolute residual . . . . . . . . . . . . . 6310.7 Sensor and source position. . . . . . . . . . . . . . . . . . . . . . . . . . . 6410.8 Left: Norm of sample mean and estimated mean of quadratic form for

one source position over all systematic errors for 31 parameters. Right:absolute value of the residual. . . . . . . . . . . . . . . . . . . . . . . . . 65

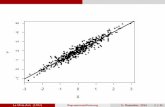

10.9 Left: Norm of sample mean and estimated mean of quadratic form overall source positions for 31 parameters, σ = 0.01. Right: absolute value ofthe residual. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

10.10 Left: norm of sample mean error and estimation of mean error over allsource positions for 31 parameters, σ = 0.01. Right: absolute value ofthe residual. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

10.11 Left: Norm of sample mean error and estimation of mean error for onesource position over all systematic errors for 31 parameters. Right: abso-lute value of the residual. . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

vi

List of Figures

10.12 Sample mean error and estimation of mean error over all positions for 62parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

10.13 Sample mean error and estimation of mean error over all systematic errorsfor 62 parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

10.14 Sample mean squared error and estimated second moment over all posi-tions for 31 parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

10.15 Sample mean squared error and estimated second moment with 31 pa-rameters for near and far field estimation. . . . . . . . . . . . . . . . . . . 70

10.16 Marker path in noiseless case . . . . . . . . . . . . . . . . . . . . . . . . . 7110.17 Left: sample of true and estimated source path for σp = 0.003, σm =

0.3. Marker path (blue) and estimated paths on top. Euclidean distancebetween source and particle mean at the bottom. Right: sample forσp = 0.003, σm = 1.5. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

10.18 Left: true and estimated source path sample for σp = 0.008, σm = 0.3.Marker path (blue) and estimated paths on top. Euclidean distancebetween source and particle mean at the bottom. Right: sample forσp = 0.008, σm = 2. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

10.19 Left: mean distance of all timesteps and samples for tested process andmeasurement noise. Right: mean distance for a random walk state function. 73

A.1 Sparsity pattern of R . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84


Acknowledgements

First and foremost, I would like to thank my supervisor Prof. Eberhard Bänsch for his support, encouragement, guidance and contributions throughout the entire course of my postgraduate studies. I would also like to thank my co-referee Prof. Barbara Kaltenbacher for her comments and remarks on the final version of this work.

At Siemens AG Erlangen, I would like to thank Dr. Johannes Reinschke for introducing me to a fascinating topic and for providing funding, background, contacts and advice from the beginning until well past the time of our direct collaboration. Dr. Reinschke provided me with resources and access to laboratory equipment and experimental data, which formed the groundwork of this thesis. I thank Mario Bechtold, Dirk Hiebel and Dr. Wolfgang Schmid for their support and their practical and theoretical advice. I would like to thank Dr. Alexander Juloski for bringing the field of Bayesian estimation to my attention.

I thank Dr. Nicolas Neuß, Dr. Dmitri Kuzmin and Dr. Cornelia Scheider of the University of Erlangen-Nürnberg for their mathematical advice, feedback and clarifications on my notation.

I thank the whole research group of the Chair of Applied Mathematics III, too many to name here, for helping to make the daily routine both productive and a pleasure.

Finally, my thanks go to my family, my friends and my love. Without them, this work would not have come to life.

Erlangen, September 2012


1. Introduction

Parameter estimation problems arise in many fields of research and application. Typically, the user wants to obtain information on the state of a system from indirect measurements by sensors. The usually nonlinear dependence of the sensor data on the system state necessitates the use of fitting techniques. The mathematical problem of parameter estimation is twofold.

• On one hand, a physical model is required to predict the sensor data for known system parameters. This is commonly referred to as the forward problem or forward solution. We consider two applications in this thesis. The main focus is on estimating the position and orientation of active electromagnetic (EM) point sources in the near field of the sensors. The signal of the sources can be considered to reach the sensors instantaneously. Therefore, the location of the sources must be determined from the spatial variation of the signal intensity across the sensors. As a second application, we consider the direction of arrival (DOA) estimation problem, which arises in many applications, e.g. radar or wireless communication. Here, the sources lie in the far field of the sensors, so that the signal intensity does not contain enough information. Instead, the differences in the arrival times of the signals at the sensors provide the usable information.

• On the other hand, once a feasible forward solution is found, the inverse problem of inferring the system parameters from potentially noisy sensor data arises. Under common simplifications, the forward solutions of the two applications share critical characteristics. This enables the use of the same algorithms for both with only slight modifications. The multiple signal classification (MUSIC) algorithm uses the spectral properties of the forward solution to scan the operating volume. Although generally suboptimal, the MUSIC algorithm has the advantage of searching for one source at a time, compared to the multi-source fit required by a least-squares solution.

This thesis concentrates on the investigation of modeling errors. In all applications, the assumptions underlying the forward solution are inevitably met only to a certain extent. Sources of error can be unknown influences on the sensor data, construction inaccuracies or model parameters that are known only within tolerances. This leads to a discrepancy between forward solution and observed data even in the noiseless case with a perfectly known system state, which in turn leads to an erroneous inverse solution. Analytical expressions for the expected error and the second-order moment of the state estimate are given in the asymptotic case. Existing error estimates concentrate largely on one-dimensional problems and employ first-order approximations in one or several stages of the derivation. In this work, these estimates are extended to potentially full second-order accuracy and to the three-dimensional (3D) case. The numerical problems that arise are treated thoroughly.

As a minor result, we examine the case of a moving EM source in space. Algorithms used in this field are based on Bayesian inference, with the Kalman filter being a prominent member in the linear Gaussian case. A new weighting technique is proposed for the applications mentioned above, which improves performance in the case of low sensor noise.

The remainder of this thesis is organized as follows. In Chapter 2, we present the physical models of the considered applications and our model of systematic errors. Chapter 3 introduces the MUSIC algorithm in its one- and three-dimensional variants. Some preliminary work on derivatives of eigensystems and singular systems is done in Chapters 4 and 5. A minor direct result of these chapters is the set of derivatives of the 3D MUSIC cost function, which are stated in Chapter 6. The main contribution of this work can be found in Chapter 7, where we extend the existing asymptotic error estimates to near second order and to the 3D case. The 3D case introduces numerical problems, which are addressed in Chapter 8. We consider the problem of a moving source in Chapter 9, where we propose a new weighting technique for a common sample-based algorithm. We show the validity of the presented algorithms through a number of test cases in Chapter 10.


2. Physical background

We provide the physical background of the applications considered in this work. The first setup is a source coil emitting an EM field that induces voltages in receiving sensor coils. The second application is a direction of arrival estimation problem, for which we use classical simplifying assumptions.

2.1. Electromagnetic source estimation

2.1.1. Magnetic induction

The voltage $u_{sc}$ induced in a closed filament wire sensor coil by a source coil with known current $i_{mc}$ of angular frequency $\omega = 2\pi f_0$ can be modeled by means of the mutual inductance $L$ between the two coils (see [1] pp. 256)

$$u_{sc} = L\,\frac{d i_{mc}(t)}{dt}.$$

Given an alternating sinusoidal current $i_{mc}(t) = I_{mc}\,e^{j(\omega t + \varphi_{mc})}$ with known amplitude $I_{mc}$ and phase $\varphi_{mc}$, the induced voltage is given by

$$u_{sc} = j\omega L\, i_{mc}(t). \tag{2.1}$$

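The mutual inductance is given by means of the magnetic flux $\Phi$ ([33] pp. 216)

$$L = \frac{\Phi}{i_{mc}(t)},$$

where

$$\Phi = \int_{A_{sc}} \vec B\cdot d\vec A_{sc} \tag{2.2}$$

is the flux of the magnetic field $\vec B$ through the sensor coil area $A_{sc}$, i.e. the integral of the normal component of $\vec B$ over $A_{sc}$, and $\vec B$ satisfies the laws of magnetostatics (see [33] p. 180)

$$\nabla\times\vec B = \mu_0 J, \qquad \nabla\cdot\vec B = 0, \tag{2.3}$$

where $J$ is the current density and $\mu_0$ is the permeability constant. The magnetic field $\vec B(\vec x)$ can be described through a vector potential $A$,

$$\vec B = \nabla\times A, \tag{2.4}$$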


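Figure 2.1.: Left: direct solution of magnetic flux equation (source coil with normal $\vec n_{mc}$, wire position $\vec x_{mc}$, tangent $\vec t_{mc}$ and center $\vec x^{\,c}_{mc}$; sensor coil with normal $\vec n_{sc}$ and boundary point $\vec x_{sc}$). Right: magnetic moment approximation (magnetic moment $\vec m$ at $\vec x^{\,c}_{mc}$; sensor area element $dA_{sc}$ at $\vec x_{sc}$).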

which has the general form

$$A(\vec x) = \frac{\mu_0}{4\pi}\int_V \frac{J(\vec x\,')}{|\vec x - \vec x\,'|}\,dx' + \nabla\Psi(\vec x), \tag{2.5}$$

where $J(\vec x\,')$ is the current density distributed over the volume $V$ and $\Psi(\vec x)$ is an arbitrary scalar function. $\Psi(\vec x)$ can be specified by a gauge transformation; in magnetostatics, the use of the Coulomb gauge $\nabla\cdot A = 0$ is common. Combining (2.3), (2.4) and the Coulomb gauge leads to

$$\nabla(\nabla\cdot A) - \nabla^2 A = \mu_0 J, \tag{2.6}$$

$$\nabla^2 A = -\mu_0 J. \tag{2.7}$$

The solution of (2.7) is (2.5) ([33] pp. 181); choosing $\Psi$ constant, for example, gives

$$A(\vec x) = \frac{\mu_0}{4\pi}\int_V \frac{J(\vec x\,')}{|\vec x - \vec x\,'|}\,dx'. \tag{2.8}$$

If we consider the source coil as a thin wire, $J(\vec x\,') = i_{mc}\,\delta(\vec x\,' - \vec x_{mc})\,\vec t(\vec x_{mc})$ holds ([41] p. 279), where $\vec x_{mc}$ is the position of the source coil wire and $\vec t$ is its tangent with $|\vec t\,| = 1$.

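Later, we will use the so-called magnetic moment approximation, but for the sake of completeness we show the magnetic flux resulting from directly integrating (2.2). Using (2.4) and (2.8) in (2.2) together with Stokes' theorem yields (see also [33] pp. 234)

$$\Phi = \int_{A_{sc}}\nabla\times A\cdot d\vec A_{sc} = \oint_{\partial A_{sc}} A\cdot d\vec x_{sc} = \frac{\mu_0\, i_{mc}}{4\pi}\oint_{\partial A_{sc}}\oint_{\partial A_{mc}}\frac{\vec t(\vec x_{mc})}{|\vec x_{sc} - \vec x_{mc}|}\,dx_{mc}\cdot d\vec x_{sc}. \tag{2.9}$$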
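As a numerical illustration of (2.9), the following minimal Python sketch (assuming NumPy; the function names, coil radii and distance are hypothetical choices, not taken from this work) evaluates the double line integral for two coaxial circular loops and divides by $i_{mc}$ to obtain the mutual inductance $L$. For a large separation it is compared with the on-axis dipole estimate $L \approx \mu_0\pi R_1^2R_2^2/(2d^3)$, which anticipates the magnetic moment approximation below.

```python
import numpy as np

MU0 = 4e-7 * np.pi  # permeability constant mu_0 in H/m


def circle(radius, z, n_pts):
    """Points and tangent elements dx = xi'(phi) dphi of a horizontal circular loop."""
    phi = np.linspace(0.0, 2.0 * np.pi, n_pts, endpoint=False)
    dphi = 2.0 * np.pi / n_pts
    pts = np.column_stack([radius * np.cos(phi), radius * np.sin(phi), np.full(n_pts, z)])
    dl = np.column_stack([-radius * np.sin(phi), radius * np.cos(phi), np.zeros(n_pts)]) * dphi
    return pts, dl


def mutual_inductance_neumann(r_src, r_sen, dist, n_pts=400):
    """L = Phi / i_mc from the double line integral (2.9) for coaxial loops."""
    x_mc, dx_mc = circle(r_src, 0.0, n_pts)   # source coil in the plane z = 0
    x_sc, dx_sc = circle(r_sen, dist, n_pts)  # sensor coil in the plane z = dist
    # Pairwise distances |x_sc - x_mc| and dot products dx_mc . dx_sc
    r = np.linalg.norm(x_sc[:, None, :] - x_mc[None, :, :], axis=2)
    dots = dx_sc @ dx_mc.T
    return MU0 / (4.0 * np.pi) * np.sum(dots / r)


def mutual_inductance_dipole(r_src, r_sen, dist):
    """Far-field estimate: on-axis dipole field times the sensor coil area."""
    return MU0 * np.pi * r_src**2 * r_sen**2 / (2.0 * dist**3)


L_exact = mutual_inductance_neumann(0.05, 0.02, 1.0)
L_dip = mutual_inductance_dipole(0.05, 0.02, 1.0)
print(L_exact, L_dip, abs(L_exact - L_dip) / L_dip)
```

The periodic trapezoidal rule is used on both loops because the integrand is smooth and periodic whenever the coils do not touch; swapping the roles of source and sensor coil returns the same value, as expected from the symmetry of (2.9).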


The setup of the direct solution for the magnetic flux using the vector potential is illustrated in Figure 2.1 left.

Rather than (2.9), we will use a simplified model which is valid if the distance between sensor and source coil is much greater than the radius of the source coil. We expand the denominator in (2.8), shifting the origin of the coordinate system so that $\vec x_{mc}$ is small, typically to the center of the source coil $\vec x^{\,c}_{mc}$. Denoting the shifted values with superscript $s$, a Taylor expansion gives (see [33] pp. 185 and [41] pp. 279)

$$\frac{1}{|\vec x^{\,s}_{sc} - \vec x^{\,s}_{mc}|} = \frac{1}{|\vec x^{\,s}_{sc}|} + \frac{\vec x^{\,s}_{sc}\cdot\vec x^{\,s}_{mc}}{|\vec x^{\,s}_{sc}|^3} + \cdots.$$

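Inserted in (2.8), the first term of this expansion vanishes, because the tangent vectors of the closed source coil wire integrate to zero, $\oint_{\partial A_{mc}}\vec t(\vec x_{mc})\,dx_{mc} = 0$. We define the magnetic moment $\vec m := \frac{1}{2}\int \vec x^{\,s}_{mc}\times J(\vec x^{\,s}_{mc})\,dx^{s}_{mc}$. In the case of a planar source coil, the magnetic moment reduces to ([1] pp. 200)

$$\vec m = i_{mc}\,A_{mc}\,\vec n_{mc},$$

where $A_{mc}$ is the source coil area and $\vec n_{mc}$ is its surface normal. Inserting the second term of the expansion in (2.8) yields

$$A(\vec x^{\,s}) = \frac{\mu_0}{4\pi}\,\frac{\vec m\times\vec x^{\,s}}{|\vec x^{\,s}|^3}, \qquad A(\vec x_{sc}) = \frac{\mu_0}{4\pi}\,\frac{\vec m\times(\vec x_{sc} - \vec x^{\,c}_{mc})}{|\vec x_{sc} - \vec x^{\,c}_{mc}|^3}. \tag{2.10}$$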
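The statement that the zeroth-order term vanishes and the first-order term yields (2.10) can be checked numerically. The Python sketch below (assuming NumPy; loop radius, current and evaluation point are hypothetical) evaluates the thin-wire integral (2.8) for a circular source coil centered at the origin in the $xy$-plane and compares it with the dipole potential (2.10) with $\vec m = i_{mc}\pi R^2\vec n_{mc}$.

```python
import numpy as np

MU0 = 4e-7 * np.pi  # permeability constant


def loop_vector_potential(x, radius, current, n_pts=2000):
    """Thin-wire integral (2.8) for a circular loop in the xy-plane, centered at 0."""
    phi = np.linspace(0.0, 2.0 * np.pi, n_pts, endpoint=False)
    dphi = 2.0 * np.pi / n_pts
    x_mc = np.column_stack([radius * np.cos(phi), radius * np.sin(phi), np.zeros(n_pts)])
    # t(x_mc) dx_mc for a counterclockwise loop
    t_dx = np.column_stack([-np.sin(phi), np.cos(phi), np.zeros(n_pts)]) * radius * dphi
    dist = np.linalg.norm(x - x_mc, axis=1)
    return MU0 * current / (4.0 * np.pi) * np.sum(t_dx / dist[:, None], axis=0)


def dipole_vector_potential(x, m):
    """Magnetic moment approximation (2.10) with the coil center at the origin."""
    return MU0 / (4.0 * np.pi) * np.cross(m, x) / np.linalg.norm(x) ** 3


radius, current = 0.05, 2.0
m = current * np.pi * radius**2 * np.array([0.0, 0.0, 1.0])  # m = i_mc A_mc n_mc
x = np.array([3.0, 1.0, 2.0])

A_wire = loop_vector_potential(x, radius, current)
A_dip = dipole_vector_potential(x, m)
rel_err = np.linalg.norm(A_wire - A_dip) / np.linalg.norm(A_dip)

# Zeroth-order term: the tangents of the closed loop sum to (numerically) zero
phi = np.linspace(0.0, 2.0 * np.pi, 2000, endpoint=False)
zeroth = np.sum(np.column_stack([-np.sin(phi), np.cos(phi)]), axis=0)
print(rel_err, zeroth)
```

For a point at distance $|\vec x|$ the remaining relative error is expected to shrink like $(R/|\vec x|)^2$, since the neglected higher-order terms of the expansion carry additional powers of $R/|\vec x|$.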

Higher-order terms of the expansion are neglected. Regarding (2.9), there are two possible ways to calculate the magnetic flux $\Phi$ using the magnetic moment model (2.10): either we calculate the curl of $A(\vec x)$ analytically and integrate over $A_{sc}$, or we calculate the line integral over $\partial A_{sc}$. In the magnetic moment approximation, the curl $\nabla\times A(\vec x)$ is given by

$$\vec B(\vec x) = \nabla\times A(\vec x) = \frac{\mu_0}{4\pi|\vec p|^3}\left(\frac{3\vec p\,(\vec p\cdot\vec m)}{|\vec p|^2} - \vec m\right) = \underbrace{\frac{\mu_0}{4\pi|\vec p|^5}\left(3\vec p\,\vec p^{\,T} - |\vec p|^2 I\right)}_{=:K(\vec x)}\vec m = K(\vec x)\,\vec m, \tag{2.11}$$

where $\vec p := \vec x - \vec x^{\,c}_{mc}$ and $I$ is the identity matrix. Note that (2.11) depends linearly on $\vec m$. This linearity is the foundation of the MUSIC algorithm, which we present in Chapter 3. We write (2.10) in a similar form that emphasizes the linear dependence on $\vec m$:

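$$A(\vec x) = \underbrace{\frac{\mu_0}{4\pi|\vec p|^3}\begin{pmatrix} 0 & p_3 & -p_2\\ -p_3 & 0 & p_1\\ p_2 & -p_1 & 0\end{pmatrix}}_{=:\tilde K(\vec x)}\vec m = \tilde K(\vec x)\,\vec m, \tag{2.12}$$

where $p_i$, $i = 1, 2, 3$, denote the components of $\vec p = \vec x - \vec x^{\,c}_{mc}$; the tilde distinguishes this matrix from $K$ in (2.11). The line integral in (2.9) can be evaluated with a parameterization $\xi_{sc}(\varphi_{sc})$, $\varphi_{sc}\in[0, a]$, of $\partial A_{sc}$ with derivative $\dot\xi_{sc} = d\xi_{sc}/d\varphi_{sc}$:

$$\Phi = \oint_{\partial A_{sc}} A\cdot d\vec x_{sc} = \int_0^a A(\xi_{sc}(\varphi_{sc}))\cdot\dot\xi_{sc}(\varphi_{sc})\,d\varphi_{sc} = \int_0^a \dot\xi_{sc}^T(\varphi_{sc})\,\tilde K(\xi_{sc}(\varphi_{sc}))\,\vec m\,d\varphi_{sc} = \left(\int_0^a \dot\xi_{sc}^T(\varphi_{sc})\,\tilde K(\xi_{sc}(\varphi_{sc}))\,d\varphi_{sc}\right)\vec m. \tag{2.13}$$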

Further simplifications are possible if the radius of the sensor coils is sufficiently small. In this case, the surface integral that results from using (2.11) in the first line of (2.9) can be approximated by the normal component of the magnetic field at the center of the coil times the area of the coil. This results in

$$\Phi = \int_{A_{sc}}\nabla\times A\cdot d\vec A_{sc} = \int_{A_{sc}} K(\vec x)\,\vec m\cdot d\vec A_{sc} = \int_{A_{sc}}\left[K(\vec x)\,\vec m\right]\cdot\vec n_{sc}\,dA_{sc} = \left(\int_{A_{sc}}\vec n_{sc}^T K(\vec x)\,dA_{sc}\right)\vec m \tag{2.14}$$

$$\approx A_{sc}\,\vec n_{sc}^T K(\vec x^{\,c}_{sc})\,\vec m, \tag{2.15}$$

where $\vec x^{\,c}_{sc}$ denotes the center of the sensor coil. Figure 2.1 right illustrates (2.14). (2.14) gives the same result as (2.13) but has the disadvantage that a surface integral has to be solved numerically. (2.15) is a straightforward approximation that gives an analytical expression.
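A short numerical check of (2.11) and (2.13) to (2.15) can be sketched in Python with NumPy. The source position, moment, sensor center, normal and radius below are hypothetical. The sketch verifies by central finite differences that the curl of the dipole potential (2.10) equals $K(\vec x)\,\vec m$, evaluates the line integral (2.13) and the surface integral (2.14) over a small circular sensor coil (which agree by Stokes' theorem), and compares both with the center-point approximation (2.15).

```python
import numpy as np

MU0 = 4e-7 * np.pi
x_mc = np.zeros(3)                    # source coil center x^c_mc (hypothetical)
m = np.array([0.0, 0.0, 1.0])         # magnetic moment (hypothetical)


def A_dip(x):
    """Vector potential (2.10)."""
    p = x - x_mc
    return MU0 / (4 * np.pi) * np.cross(m, p) / np.linalg.norm(p) ** 3


def K(x):
    """Matrix of (2.11): mu_0 / (4 pi |p|^5) (3 p p^T - |p|^2 I), so that B = K m."""
    p = x - x_mc
    r = np.linalg.norm(p)
    return MU0 / (4 * np.pi * r**5) * (3 * np.outer(p, p) - r**2 * np.eye(3))


def curl_fd(f, x, h=1e-5):
    """Curl of f at x by central differences; J[i, j] = d f_i / d x_j."""
    J = np.empty((3, 3))
    for j in range(3):
        e = np.zeros(3)
        e[j] = h
        J[:, j] = (f(x + e) - f(x - e)) / (2 * h)
    return np.array([J[2, 1] - J[1, 2], J[0, 2] - J[2, 0], J[1, 0] - J[0, 1]])


# Circular sensor coil: center c, unit normal n, radius r_sc (hypothetical values)
c = np.array([0.3, 0.2, 0.4])
n = np.array([1.0, 1.0, 1.0]) / np.sqrt(3.0)
r_sc = 0.01
u = np.array([1.0, 0.0, 0.0]) - n[0] * n    # in-plane basis with u x v = n
u /= np.linalg.norm(u)
v = np.cross(n, u)

# (2.13): line integral of A along xi(phi) = c + r_sc (cos phi u + sin phi v)
phis = np.linspace(0.0, 2 * np.pi, 400, endpoint=False)
dphi = 2 * np.pi / phis.size
flux_line = sum(
    A_dip(c + r_sc * (np.cos(phi) * u + np.sin(phi) * v))
    @ (r_sc * (-np.sin(phi) * u + np.cos(phi) * v)) * dphi
    for phi in phis
)

# (2.14): surface integral of n^T K(x) m in polar coordinates (Gauss-Legendre in rho)
t, w = np.polynomial.legendre.leggauss(20)
rhos, w_rho = r_sc * (t + 1) / 2, w * r_sc / 2
flux_surf = sum(
    wr * rho * dphi * (n @ K(c + rho * (np.cos(phi) * u + np.sin(phi) * v)) @ m)
    for rho, wr in zip(rhos, w_rho)
    for phi in phis
)

# (2.15): center-point approximation A_sc n^T K(c) m
flux_approx = np.pi * r_sc**2 * (n @ K(c) @ m)

curl_err = np.linalg.norm(curl_fd(A_dip, c) - K(c) @ m) / np.linalg.norm(K(c) @ m)
print(curl_err, flux_line, flux_surf, flux_approx)
```

The line integral needs only a one-dimensional quadrature, while the surface integral needs a two-dimensional one; the center-point value differs from both only at order $(r_{sc}/|\vec p|)^2$ for this geometry.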

2.1.2. Measurement of induced voltage

In an actual application, it is not possible to measure the voltage induced by the source in a sensor coil directly. Measurement devices, parasitic currents and the self-inductance of the sensor coil influence the measurements. One possibility to model the source-sensor measurement setup is shown in Figure 2.2. The influence of cables is neglected. The sensor coil is characterized by its resistance $R_s$, capacitance $C_s$ and self-inductance $L_s$. The measurement device is characterized by its resistance $R_m$. The influence of the source coil is modeled through the mutual inductance $L$, which can be calculated as described above.

Figure 2.2.: Equivalent circuit of simple measurement setup (source: $i_{mc}$, $L$; sensor: $L_s$, $R_s$, $C_s$; measurement: $R_m$, $u_{meas}$)

With Kirchhoff's circuit laws, the measured voltage $u_{meas}$ is given by

$$u_{meas} = \frac{j\omega R_m}{R_s + R_m + j\omega L_s + j\omega C_s R_m R_s - \omega^2 L_s C_s R_m}\; i(t)\, L(x_{mc}).$$

As can be seen, all measurement-related factors can be summarized in a linear factor

$$l := \frac{j\omega R_m}{R_s + R_m + j\omega L_s + j\omega C_s R_m R_s - \omega^2 L_s C_s R_m},$$

which may vary from sensor to sensor. The measured voltage is then given by

$$u_{meas} = l\, i(t)\, L(x_{mc}).$$

Further real-world parameters such as the number of windings of the coils are neglected here but could easily be incorporated in $l$.
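The factor $l$ can be cross-checked against a direct impedance calculation. The Python sketch below assumes the circuit topology that reproduces the formula for $l$: the induced EMF $j\omega L\,i(t)$ drives $R_s$ and $L_s$ in series, and $C_s$ lies in parallel with the measurement resistance $R_m$. The frequency and component values are hypothetical.

```python
import numpy as np


def gain_closed_form(omega, Rs, Ls, Cs, Rm):
    """Linear factor l from the text, so that u_meas = l * i(t) * L."""
    return 1j * omega * Rm / (
        Rs + Rm + 1j * omega * Ls + 1j * omega * Cs * Rm * Rs - omega**2 * Ls * Cs * Rm
    )


def gain_voltage_divider(omega, Rs, Ls, Cs, Rm):
    """Same factor from a voltage divider (assumed topology, see lead-in)."""
    z_series = Rs + 1j * omega * Ls             # sensor resistance and self-inductance
    z_load = Rm / (1 + 1j * omega * Cs * Rm)    # C_s in parallel with R_m
    emf_per_unit = 1j * omega                   # EMF j*omega*L*i for L = i = 1
    return emf_per_unit * z_load / (z_series + z_load)


omega = 2 * np.pi * 10e3                         # 10 kHz (hypothetical)
params = dict(Rs=5.0, Ls=1e-3, Cs=1e-9, Rm=1e6)  # hypothetical component values
l1 = gain_closed_form(omega, **params)
l2 = gain_voltage_divider(omega, **params)
print(l1, l2)
```

Expanding $Z_{load}/(Z_{series} + Z_{load})$ with $Z_{load} = R_m/(1 + j\omega C_s R_m)$ gives exactly the denominator of $l$, which is why both functions agree.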

2.1.3. Summary

Overall, we presented four different possible calculations of the magnetic flux. (2.9) is the direct solution for the magnetic flux that enters Faraday's law of induction. The magnetic moment approximation of the vector potential $A$ results in two equivalent ways to calculate the magnetic flux, (2.13) and (2.14), where (2.13) requires a single (line) integral to be computed numerically while (2.14) requires a double (surface) integral. (2.14) offers the possibility to approximate the integral over the sensor surface by (2.15), which may be sufficient in certain applications.

We only measure the effective value $u_{eff} = \mathrm{Re}(u_{meas})/\sqrt{2}$ of the induced voltage, so all parameters can be considered real-valued. Considering $n$ sensors and $p$ measurements, the measurements of one sensor are taken over time as $U = [u_{eff}(t_1), \dots, u_{eff}(t_p)]$ and are concatenated in $y(t) = [U_1^T, \dots, U_n^T]^T \in \mathbb{R}^{n\times p}$. Using the magnetic moment approximation, the measurements of sensor $i$ and the source are connected through

$$y_i(t) = a(x_{mc})_i\,\omega_{mc}(t), \tag{2.16}$$

where $\omega_{mc}(t) = \vec n_{mc}\, i(t) \in \mathbb{R}^{3\times p}$ is the normed source orientation, defined as the surface normal of the source coil times the time series of the current. In the following, this will be referred to as the orientation of the source. Although not rigorously correct, this notation has a nice geometrical interpretation. Given $n$ sensors, we define $a(x_{mc}) := [a(x_{mc})_1^T, \dots, a(x_{mc})_n^T]^T \in \mathbb{R}^{n\times 3}$, with the $i$-th row corresponding to the $i$-th sensor, and

$$a(x_{mc})_i = \begin{cases} \frac{1}{2}\sqrt{2}\,\mathrm{Re}(k_i A_{mc})\displaystyle\int_0^{a_i}\dot\xi_{sc,i}^T(\varphi_{sc})\,\tilde K(\xi_{sc,i}(\varphi_{sc}))\,d\varphi_{sc},\\[1ex] \frac{1}{2}\sqrt{2}\,\mathrm{Re}(k_i A_{mc})\displaystyle\int_{A_{sc,i}}\vec n_{sc,i}^T K(\vec x)\,dA_{sc,i},\\[1ex] \frac{1}{2}\sqrt{2}\,\mathrm{Re}(k_i A_{mc})\,A_{sc,i}\,\vec n_{sc,i}^T K(\vec x^{\,c}_{sc,i}), \end{cases}\qquad i = 1, \dots, n,$$

depending on which calculation method of the magnetic flux is chosen, where $\tilde K$ denotes the matrix of (2.12). All linear parameters are summarized in a parameter $k_i$ for each sensor, called the measurement gain. In the case of $m$ sources, we can simply concatenate the influences of the sources and obtain the final expression of the forward solution:

$$y(t) = \underbrace{\left[a(x_{mc,1}), \dots, a(x_{mc,m})\right]}_{=:A(x)}\begin{bmatrix}\omega_{mc,1}(t)\\ \vdots\\ \omega_{mc,m}(t)\end{bmatrix} = A(x)\,\omega(t), \tag{2.17}$$

where $A(x)$ is the so-called lead-field matrix.

In this model, we do not consider mutual coupling between sensors. A sensor coil with nonzero current itself emits an EM field, and therefore the sensors influence each other. This would turn (2.17) into a system of equations $M(x)\,y(t) = A(x)\,\omega(t)$, with $M(x)\in\mathbb{R}^{n\times n}$ describing the mutual inductance between source and sensors and among the sensors themselves. Since the sensors do not change position, $M(x)$ is constant except on the diagonal. Provided $M(x)$ is invertible, this system of linear equations reduces to the form (2.17) with the lead-field matrix $M(x)^{-1}A(x)$. Therefore, and because the influence of mutual inductance is typically negligible, we do not model mutual inductance between sensors.
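The structure of (2.16) and (2.17) can be illustrated with a small synthetic example. The Python sketch below (assuming NumPy; sensor layout, gains, source positions and currents are hypothetical, and all constant factors of $a(x_{mc})_i$ are lumped into a random gain $k_i$) builds each row $a(x_{mc})_i$ from the center-point approximation (2.15), stacks the blocks into the lead-field matrix $A(x)$ and forms $y = A(x)\,\omega(t)$. With static orientations each source contributes a rank-one term, so $y$ lies in the column space of $A(x)$ and has rank $m$.

```python
import numpy as np

MU0 = 4e-7 * np.pi
rng = np.random.default_rng(0)


def K(x, x_mc):
    """Matrix K of (2.11) for a source centered at x_mc, evaluated at x."""
    p = x - x_mc
    r = np.linalg.norm(p)
    return MU0 / (4 * np.pi * r**5) * (3 * np.outer(p, p) - r**2 * np.eye(3))


def lead_field_block(x_mc, centers, normals, areas, gains):
    """a(x_mc) in R^{n x 3}: row i is k_i A_sc,i n_sc,i^T K(x^c_sc,i)."""
    return np.vstack([
        k * a * (nrm @ K(c, x_mc)) for c, nrm, a, k in zip(centers, normals, areas, gains)
    ])


n_sensors, n_samples = 12, 50
centers = rng.uniform(-0.5, 0.5, (n_sensors, 3)) + np.array([0.0, 0.0, 1.0])
normals = rng.normal(size=(n_sensors, 3))
normals /= np.linalg.norm(normals, axis=1, keepdims=True)
areas = np.full(n_sensors, 1e-4)
gains = rng.uniform(0.5, 1.5, n_sensors)    # measurement gains k_i (hypothetical)

sources = [np.array([0.1, 0.0, 0.0]), np.array([-0.2, 0.1, 0.05])]
A = np.hstack([lead_field_block(s, centers, normals, areas, gains) for s in sources])

# omega(t): stacked blocks n_mc * i(t), one fixed orientation per source
t = np.linspace(0.0, 1.0, n_samples)
blocks = []
for k, freq in enumerate([3.0, 5.0]):
    n_mc = rng.normal(size=3)
    n_mc /= np.linalg.norm(n_mc)
    blocks.append(np.outer(n_mc, np.sin(2 * np.pi * freq * t + k)))
omega = np.vstack(blocks)                   # shape (3m, p)

y = A @ omega                               # forward solution (2.17), shape (n, p)
s = np.linalg.svd(y, compute_uv=False)
rank_y = int(np.sum(s > 1e-8 * s[0]))
Q, _ = np.linalg.qr(A)                      # orthonormal basis of range(A)
residual = np.linalg.norm(y - Q @ (Q.T @ y)) / np.linalg.norm(y)
print(A.shape, rank_y, residual)
```

A relative singular-value threshold is used for the rank because the entries of $y$ are tiny in SI units; only their ratios matter.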

2.2. Estimation of direction of arrival

Estimation of the direction of arrival (DOA) of a signal is a classical problem in signal processing with applications in radar [53] and wireless communication [69], [26]. We assume that an EM point source in the far field, characterized in polar coordinates by $(\theta, \phi)$, emits an electromagnetic wave, see Figure 2.3 left. The wave impinges on $n$ sensors located at positions $r_k$, $k = 1, \dots, n$. The spatio-temporal wave field $\Psi(r, t)$ propagates according to the wave equation ([33] Chapter 6, and [37] for a derivation from Maxwell's equations)

$$\left(\nabla^2 - \frac{1}{c^2}\frac{\partial^2}{\partial t^2}\right)\Psi(r, t) = 0,$$

where $\nabla^2$ is the spatial Laplacian operator and $c$ is the propagation velocity in the medium.

Several common assumptions that are consistent with applications result in a simplified model. We refer to the work of Doron [18] and Belloni [11]. The first assumption is $\phi = \pi$, i.e. we consider a one-dimensional DOA problem in the $xy$-plane.


Figure 2.3.: Left: polar coordinates ($\theta$, $\phi$) of the EM source. Right: EM plane wave impinging at origin, with sensor position $r_k$, sensor angle $\gamma_k$, source direction $r_s$ with angle $\theta_s$, and signed distance $d$.

The sensors are considered isotropic, i.e. the radiation pattern of each sensor is unity for every EM source direction. We assume that the source, characterized by $r_s$ with $\|r_s\| = 1$, or equivalently by $\theta_s$, lies in the far field of the sensors, so that the signal received by the sensors can be considered a plane wave. The source signal $s(t)$ is considered narrowband, i.e. it has a small bandwidth compared to the carrier frequency $\omega_c = \frac{2\pi}{\lambda}$, where $\lambda = \frac{c}{f}$ is the wavelength, $f$ is the signal frequency and $c$ is the propagation speed of the wave in the medium. At the origin, the signal wave $W(r, t)$ has the form

$$W(0, t) = s(t)\,e^{j\omega_c t}.$$

The origin is usually placed at the centroid of the sensors. Under these assumptions, the signal received by sensor $k$ is time-shifted by the distance between the sensor and the wave front through the origin ([26] and [16]), see Figure 2.3 right. The signed distance $d$ between the sensor and the plane wave front through the origin is given by $d = r_k\cdot r_s$, or equivalently by $d = \|r_k\|\cos(\theta_s - \gamma_k)$, where $\theta_s$ and $\gamma_k$ describe the angle of the incoming wave and the sensor position angle, respectively. $d$ is positive if the sensor lies on the side of the wave front in positive $r_s$ direction, and negative otherwise. The time-shifted signal is given by ([40], [59])

$$W(\theta_s, t) = s\big(t + \|r_k\|\cos(\theta_s - \gamma_k)\big)\,e^{j\omega_c\left(t + \|r_k\|\cos(\theta_s - \gamma_k)\right)}.$$

Due to the narrowband assumption, we can assume $s(t + \|r_k\|\cos(\theta_s - \gamma_k)) \approx s(t)$, and the sensor data is finally given by

$$W(\theta_s, t) = \left[s(t)\,e^{j\omega_c t}\right] e^{j\omega_c\|r_k\|\cos(\theta_s - \gamma_k)}.$$

Given n sensors located at (r1, . . . , rn), we can define

a(θs) =[ejωc‖r1‖ cos(θs−γ1), . . . , ejωc‖rm‖ cos(θs−γn)

]T,


and, assuming $m$ signal sources impinging at $x = (\theta_1, \dots, \theta_m)$, we obtain a forward solution

$$y(t) = \underbrace{\left[a(\theta_1), \dots, a(\theta_m)\right]}_{=:A(\theta)} \underbrace{\begin{bmatrix} e^{j\omega_c t} s_1(t) \\ \vdots \\ e^{j\omega_c t} s_m(t) \end{bmatrix}}_{=:\omega(t)} = A(x)\,\omega(t), \tag{2.18}$$

where $A$ is again referred to as the lead-field matrix. As one can see, this forward solution has the same properties as (2.17) and is used frequently in DOA estimation problems ([62], [61], [74]).

This combination of linear (for orientation/time series) and nonlinear (for position/DOA) dependence of measurements on signal parameters, as illustrated in (2.17) and (2.18), can be found in various other applications such as electroencephalography (EEG) and magnetoencephalography (MEG) ([8]) or thunderstorm localization ([47]). It is the foundation of the MUSIC algorithm introduced in Chapter 3.
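The structure of (2.18) can be reproduced in a few lines. The following Python/NumPy sketch is illustrative only and rests on assumptions that are not part of the text: eight sensors on a hypothetical uniform circular array of radius 0.5, the value $\omega_c = 2\pi$, two sources at $\theta = 0.3$ and $\theta = 1.2$, and synthetic narrowband signals. The helper names `steering_vector` and `lead_field` are made up for this example.

```python
import numpy as np

def steering_vector(theta, radii, gammas, omega_c):
    """a(theta)_k = exp(j * omega_c * ||r_k|| * cos(theta - gamma_k))."""
    return np.exp(1j * omega_c * radii * np.cos(theta - gammas))

def lead_field(thetas, radii, gammas, omega_c):
    """A(x) = [a(theta_1), ..., a(theta_m)] for x = (theta_1, ..., theta_m)."""
    return np.column_stack([steering_vector(t, radii, gammas, omega_c) for t in thetas])

# Hypothetical array: 8 sensors on a circle of radius 0.5 around the origin.
n = 8
gammas = 2 * np.pi * np.arange(n) / n      # sensor position angles gamma_k
radii = np.full(n, 0.5)                    # sensor distances ||r_k||
omega_c = 2 * np.pi                        # assumed carrier value
thetas = np.array([0.3, 1.2])              # source directions theta_1, theta_2

A = lead_field(thetas, radii, gammas, omega_c)   # n x m lead-field matrix

t = np.linspace(0.0, 1.0, 200)                   # sampling times
s = np.vstack([np.cos(2 * np.pi * 3 * t),        # narrowband source signals s_i(t)
               np.sin(2 * np.pi * 5 * t)])
omega = np.exp(1j * omega_c * t) * s             # omega_i(t) = e^{j omega_c t} s_i(t)
Y = A @ omega                                    # column k is y(t_k) = A(x) omega(t_k)
```

The position parameters enter nonlinearly through $A(x)$, while the signal parameters enter linearly through $\omega(t)$; this split is what MUSIC exploits.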

2.3. Systematic noise

The models introduced in the last two sections will inevitably match the conditions in an application imperfectly. Errors can arise from very different sources. First of all, the assumptions required to achieve the nonlinear/linear separation of the parameters shown in the last two sections may be violated substantially. Furthermore, unknown EM sources may be present. Figure 2.4 shows an example where the sensor positions are not known exactly. Due to the distorted sensor positions, we have to expect erroneous parameter estimates even for noise-free measurements. We will not treat the cases in which the physical model itself is invalid or in which unknown parameters influence the sensor data. To preserve tractability, we assume the model is correct but its parameters may not be. These errors will be called systematic errors or modeling errors. We model the erroneous forward solution in a very general sense as

$$y(t, h) = A(x, h)\,\omega(t),$$

i.e., the systematic error is treated through the lead-field matrix. Of course, we have $A(x, 0) = A(x)$ if the model has no systematic error. The influence of $h$ on $A$ depends on the given problem.

As an example, we consider the 3D estimation problem with erroneous sensor positions and the lead-field matrix (2.15). In this case, we have $h_i := [h^k_i; \vec h^p_i; \vec h^o_i] \in \mathbb{R}^7$ for one sensor,


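[Figure 2.4: expected sensors yield the expected sensor data $y(t, 0)$, while the real (displaced) sensors yield the measured sensor data $y(t, h)$; the inverse solution applied to the measured data returns an estimated state $\hat{x}$ instead of the true state $x$.]

Figure 2.4.: Systematic errors in the case of distorted sensors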

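$h := [h_1^T, \dots, h_n^T]^T$ and

$$a(x_{mc}, h_i)_i = \tfrac{1}{2}\sqrt{2}\,\mathrm{Re}\big((k_i + h^k_i) A_{mc}\big) A_{sc} (\vec n_{sc} + \vec h^o_i)^T K(\vec x_{mc} + \vec h^p_i),$$

$$a(x_{mc}, h) = \left[a(x_{mc}, h_1)_1^T, \dots, a(x_{mc}, h_n)_n^T\right]^T,$$

$$y(t, h) = \left[a(x_{mc,1}, h), \dots, a(x_{mc,m}, h)\right] \begin{bmatrix} \omega_{mc,1}(t) \\ \vdots \\ \omega_{mc,m}(t) \end{bmatrix}, \quad\text{i.e.}\quad y(t, h) = A(x, h)\,\omega(t).$$

We restrict ourselves to $h \in \mathbb{R}^k$ in this work, with $k \in \mathbb{N}$ depending on the problem.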
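For the DOA model of Section 2.2, a systematic error in the sensor positions can be simulated directly. The sketch below is a hypothetical illustration, not the 3D model (2.15): it computes the phase as $\omega_c (r_k + h_k) \cdot r_s$, which reduces to $\omega_c \|r_k\| \cos(\theta_s - \gamma_k)$ for $h = 0$ because $d = r_k \cdot r_s$, and collects planar position errors $h_k \in \mathbb{R}^2$ into $h \in \mathbb{R}^{2n}$. The array, the error magnitude of 0.02 and the name `lead_field_h` are assumptions.

```python
import numpy as np

def lead_field_h(thetas, positions, h, omega_c):
    """Hypothetical A(x, h) for a planar DOA array with sensor position errors.

    positions: (n, 2) nominal sensor positions r_k
    h:         (n, 2) position errors, i.e. the real sensors sit at r_k + h_k
    """
    directions = np.stack([np.cos(thetas), np.sin(thetas)])      # (2, m) unit vectors r_s
    return np.exp(1j * omega_c * (positions + h) @ directions)   # (n, m)

rng = np.random.default_rng(0)
n, omega_c = 8, 2 * np.pi
gammas = 2 * np.pi * np.arange(n) / n
positions = 0.5 * np.column_stack([np.cos(gammas), np.sin(gammas)])
thetas = np.array([0.3, 1.2])
omega = rng.standard_normal((2, 100)) + 1j * rng.standard_normal((2, 100))

h = 0.02 * rng.standard_normal((n, 2))                        # assumed position errors
A0 = lead_field_h(thetas, positions, np.zeros((n, 2)), omega_c)  # A(x, 0) = A(x)
Ah = lead_field_h(thetas, positions, h, omega_c)                 # A(x, h)

Y_expected = A0 @ omega      # y(t, 0): what the model predicts
Y_measured = Ah @ omega      # y(t, h): what noise-free, displaced sensors record
rel_dev = np.linalg.norm(Y_measured - Y_expected) / np.linalg.norm(Y_expected)
```

Even without sensor noise, `Y_measured` differs from `Y_expected`; an inverse solution fitted with $A(x, 0)$ will therefore in general not return the true $x$.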


3. The MUSIC Algorithm

3.1. Introduction

The MUSIC algorithm is a long-known and successful tool in parameter estimation. To our knowledge, Schmidt [62] was the first to describe the MUSIC cost function. Mosher and Leahy introduced MUSIC in the field of EEG and MEG [50] (see also [47]). MEG and EEG brain imaging is an active field of research, and numerous variants of the algorithm have been introduced since then. Mosher and Leahy proposed an enhanced recursively applied (RAP-) MUSIC [48], [49], which is designed to treat multiple sources that are difficult to resolve as well as correlated sources. [17] introduced a hybrid algorithm which combines the global optimization technique space mapping (SM) with RAP-MUSIC. [31] suggested a precorrelated and orthogonally projected (POP-) MUSIC approach for a better resolution of correlated sources. [52] and [2] suggested the use of fourth-order (FO) moments of the acquired sample covariance matrix, which resulted in the FO-MUSIC algorithm. In the radar/wireless communication field, some specialized algorithms were invented. ROOT-MUSIC exploits the resulting Vandermonde structure of the signal gain in the case of a uniform linear array of sensors [9]. If the sensors are aligned such that one subarray of sensors is just a translation of another subarray, the ESPRIT algorithm utilizes the translational invariance of the signal gain [61]. Variants for a uniform circular array (UCA) are introduced in [10].

Algorithms in the field of EEG/MEG can roughly be divided into two categories. MUSIC is considered to belong to the class of parametric algorithms, which provide a cost function that can be used in an optimization algorithm [8]. Examples of parametric algorithms besides MUSIC and its variants are Least-Squares Estimation [8] and Beamforming [72]. The other class of approaches, called imaging algorithms, uses a predefined grid on which a global solution is sought. Examples are LORETA [58] and FOCUSS [28], [29].

Throughout all the variants of MUSIC, however, the basic approach and cost function used by Schmidt remained unchanged at their core. We will give the derivation and the ideas of Schmidt's approach for the case of scalar linear parameters in Section 3.2. In Section 3.3, we will treat the case of vector-valued linear parameters. In the aforementioned literature, this case was handled rather sloppily. Therefore, we will provide a more in-depth review of the necessary concept of principal angles.

We consider the problem of estimating the state parameters of a known number of $m$ sources emitting signals measured by $n$ sensors, where $m < n$. The MUSIC algorithm utilizes the special form of the forward solution (2.17) and (2.18)

$$y(t) = A(x)\,\omega(t) + n(t)$$


derived in Sections 2.1.3 and 2.2, with additive noise $n(t)$, where $y \in \mathbb{R}^n$, $A \in \mathbb{R}^{n\times k}$ and $\omega \in \mathbb{R}^k$. We assume the columns of $A(x) = [a_1(x), \dots, a_k(x)]$ to be linearly independent to ensure the uniqueness of the solution, where $k$ depends on the number of sources and the dimension of the linear parameters. We assume $A$ and $\omega$ to be real-valued. This is justified by Section 2.1.3 in the 3D parameter estimation case. In the 1D case, $A$ and $\omega$ are complex, but we restrict ourselves to the real case. A derivation of the MUSIC cost function in the complex case is a straightforward extension which can be found in various publications mentioned above (see [62], [21]).

The noise is assumed to be temporally and spatially uncorrelated Gaussian with zero mean and covariance $E[n(t)n(t)^T] = \sigma^2 I_n$. The signals of two different sources are assumed to be uncorrelated, and signal and noise are assumed to be uncorrelated as well. First, we will describe a one-dimensional approach as introduced in the DOA estimation problem in Section 2.2, in which the position of one source can be described through one parameter, i.e. $k = m$ and $\omega = [\omega_1^T, \dots, \omega_m^T]^T$ with scalar $\omega_i$, $i = 1, \dots, m$. Later, we extend this approach to a fully three-dimensional case as shown in Section 2.1.3, i.e. $\omega_i \in \mathbb{R}^3$ and $k = 3m$, which will require the use of principal angles.

3.2. Scalar-valued problem

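The asymptotic autocorrelation of $y(t)$ is given by

$$R = E\left[y(t)y(t)^T\right] = A(x)\underbrace{E\left[\omega(t)\omega(t)^T\right]}_{=:P}A(x)^T + E\left[A(x)\omega(t)n(t)^T\right] + E\left[n(t)\omega(t)^TA(x)^T\right] + E\left[n(t)n(t)^T\right] = A(x)PA(x)^T + \sigma^2 I_n, \tag{3.1}$$

where the two mixed terms vanish because the noise has zero mean and is uncorrelated with the signals. We have $\operatorname{rank}(R) = n$ and $\operatorname{rank}(P) = m$, since we assumed the sources to be uncorrelated with the noise and among each other. When sorted in descending order of magnitude, the eigenvalues $\lambda_i$, $i = 1, \dots, n$, of $R$ satisfy $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_m > \lambda_{m+1} = \dots = \lambda_n = \sigma^2$, that is, the eigenvalues of $APA^T$ are shifted by the noise variance $\sigma^2$.

The MUSIC algorithm exploits this distribution of eigenvalues to split the space spanned by $R$ into the so-called signal and noise subspaces through the eigendecomposition

$$R = A(x)PA(x)^T + \sigma^2 I = \begin{bmatrix}\Phi_s & \Phi_n\end{bmatrix}\begin{bmatrix}\Lambda + \sigma^2 I & 0\\ 0 & \sigma^2 I\end{bmatrix}\begin{bmatrix}\Phi_s & \Phi_n\end{bmatrix}^T = \Phi_s\Lambda_s\Phi_s^T + \Phi_n\Lambda_n\Phi_n^T,$$

where $\Lambda$ contains the $m$ nonzero eigenvalues of $A(x)PA(x)^T$, $\Lambda_s = \Lambda + \sigma^2 I$, $\Lambda_n = \sigma^2 I$, and the spans of the matrices $\Phi_s \in \mathbb{R}^{n\times m}$ and $\Phi_n \in \mathbb{R}^{n\times(n-m)}$ are the signal and the noise subspaces,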


respectively. Since $R$ is symmetric, the signal and noise eigenvectors form an orthonormal basis of $\mathbb{R}^n$. We define the MUSIC projector as

$$\Pi := \Phi_s\Phi_s^T. \tag{3.2}$$

Since $\Pi$ is an orthogonal projection, $\Pi = I - \Phi_n\Phi_n^T$ also holds. If $m$ is known, this partitioning is straightforward. If $m$ is unknown, a distinct drop in the magnitude of the eigenvalues of $R$ can be used as an indicator of the number of sources [50]. The MUSIC projector can be used to determine the similarity between the signal space spanned by the received signal and the space spanned by the lead field at an estimated source position $x$. The MUSIC cost function takes the form

$$f(x) = \frac{a(x)^T\Pi\, a(x)}{a(x)^Ta(x)}, \tag{3.3}$$

where $a(x)$ is a column of the lead-field matrix. (3.3) can be interpreted as the projection of $a(x)$ onto the space spanned by $\Pi$, followed by a scalar product with $a(x)$, normalized by $a(x)^Ta(x)$. Since $a(x)^T\Pi a(x) = \|\Pi a(x)\|^2$, (3.3) equals the squared cosine of the angle between $a(x)$ and its projection $\Pi a(x)$; hence it is 1 if $a(x)$ lies entirely in the space spanned by $\Pi$ and 0 if $a(x)$ is orthogonal to it. The range of $f(x)$ is therefore $[0, 1]$. The lower bound is a direct consequence of the quadratic form $a(x)^T\Pi a(x) = \|\Pi a(x)\|^2 \ge 0$; the upper bound can easily be derived through the submultiplicative property of the 2-norm together with $\|\Pi\|_2 = 1$. As a side note, the following equations also hold trivially:

$$\frac{a(x)^T\Pi\, a(x)}{a(x)^Ta(x)} = \frac{a(x)^T(I - \Phi_n\Phi_n^T)a(x)}{a(x)^Ta(x)} = 1 - \frac{a(x)^T\Phi_n\Phi_n^Ta(x)}{a(x)^Ta(x)}.$$
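The eigenvalue structure behind (3.1) to (3.3) can be checked numerically. The sketch assumes a hypothetical real-valued lead field $a(x)_k = 1/\|p_k - 2(\cos x, \sin x)\|$ (an inverse-distance field of a source on a circle of radius 2, observed by sensors $p_k$ on the unit circle), chosen only to keep the example real-valued as in this chapter; $P$, $\sigma^2$ and the geometry are illustrative.

```python
import numpy as np

def lead_vector(x, sensors):
    """Hypothetical real lead field: inverse distance to a source at 2*(cos x, sin x)."""
    source = 2.0 * np.array([np.cos(x), np.sin(x)])
    return 1.0 / np.linalg.norm(sensors - source, axis=1)

n, m, sigma2 = 8, 2, 0.01
angles = 2 * np.pi * np.arange(n) / n
sensors = np.column_stack([np.cos(angles), np.sin(angles)])   # sensors on the unit circle
x_true = np.array([0.3, 1.2])

A = np.column_stack([lead_vector(x, sensors) for x in x_true])  # n x m lead-field matrix
P = np.diag([2.0, 1.0])                                         # uncorrelated source powers
R = A @ P @ A.T + sigma2 * np.eye(n)                            # autocorrelation (3.1)

eigvals, eigvecs = np.linalg.eigh(R)     # eigh returns eigenvalues in ascending order
Phi_n = eigvecs[:, : n - m]              # noise subspace: n - m smallest eigenvalues
Phi_s = eigvecs[:, n - m :]              # signal subspace: m largest eigenvalues
Pi = Phi_s @ Phi_s.T                     # MUSIC projector (3.2)

def music_cost(x):
    """f(x) = a(x)^T Pi a(x) / a(x)^T a(x), cf. (3.3)."""
    a = lead_vector(x, sensors)
    return (a @ Pi @ a) / (a @ a)
```

The $n - m$ smallest eigenvalues equal $\sigma^2$, $f$ equals 1 at both true positions, and the identity with the noise projector holds up to round-off.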

Since $a(x)$ and $a(y)$ are linearly independent for $x \neq y$ by assumption, $f(x) = 1$ if and only if $x$ is an actual source position. A slightly different cost function was introduced by Ferréol in [21] with

$$f(x) = a(x)^T\Pi_F\, a(x), \qquad \Pi_F = I - A(x)A(x)^+ = I - y(t)y(t)^+, \tag{3.4}$$

where $(\cdot)^+$ denotes the Moore-Penrose pseudo-inverse and, inside $\Pi_F$, $x$ is the actual source position. This cost function attains its minima at the true source positions and has the advantage of having a simple derivative, which we will use in Section 10.2.1.

In an application, we will not have the autocorrelation $R$, but rather an approximation

$$\hat R = \frac{1}{N}\sum_{i=1}^N y(t_i)y(t_i)^T$$

based on $N$ measurements. This will lead to approximations $\hat\Phi_s$ and $\hat\Phi_n$ of the signal and noise subspaces. We therefore have to seek source positions whose lead-field vectors give the best projection into the space spanned by $\hat\Phi_s$ or, equivalently, are nearly orthogonal to $\hat\Phi_n$.


The approximated cost function $\hat f(x) = \frac{a(x)^T\hat\Pi\, a(x)}{a(x)^Ta(x)}$, with $\hat\Pi := \hat\Phi_s\hat\Phi_s^T$, can be used as the cost function in an optimization routine.

Note that $\hat f(x)$ does not depend on the signal orientation $\omega(t)$; therefore, the space the optimizer has to operate on is noticeably reduced. Once a feasible maximizer $\hat x := \operatorname{argmax}_x \hat f(x)$ is found, the remaining unknown parameters can be determined through ([50])

$$\hat\omega(t) = A(\hat x)^+ y(t).$$
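With $N$ snapshots, $\hat R$ replaces $R$. The sketch below reuses the hypothetical inverse-distance lead field for a single source, forms $\hat R$, maximizes $\hat f$ with a plain grid search (a stand-in for a proper optimization routine; with $m > 1$ sources one would look for the $m$ largest local maxima instead) and recovers $\hat\omega(t) = A(\hat x)^+y(t)$. Noise level, $N$ and grid resolution are assumptions.

```python
import numpy as np

def lead_vector(x, sensors):
    """Hypothetical real lead field: inverse distance to a source at 2*(cos x, sin x)."""
    source = 2.0 * np.array([np.cos(x), np.sin(x)])
    return 1.0 / np.linalg.norm(sensors - source, axis=1)

rng = np.random.default_rng(42)
n, m, N, sigma = 8, 1, 5000, 0.01
angles = 2 * np.pi * np.arange(n) / n
sensors = np.column_stack([np.cos(angles), np.sin(angles)])
x_true = 0.3

omega = 1.5 * rng.standard_normal((m, N))                       # source time series
Y = np.outer(lead_vector(x_true, sensors), omega[0]) + sigma * rng.standard_normal((n, N))
R_hat = Y @ Y.T / N                                              # sample autocorrelation

eigvals, eigvecs = np.linalg.eigh(R_hat)                         # ascending eigenvalues
Phi_s_hat = eigvecs[:, n - m :]                                  # estimated signal subspace
Pi_hat = Phi_s_hat @ Phi_s_hat.T

def music_cost_hat(x):
    a = lead_vector(x, sensors)
    return (a @ Pi_hat @ a) / (a @ a)

grid = np.linspace(0.0, 2 * np.pi, 3601)
costs = np.array([music_cost_hat(x) for x in grid])
x_hat = grid[np.argmax(costs)]                                   # maximizer of f_hat on the grid

A_hat = lead_vector(x_hat, sensors)[:, None]                     # A(x_hat), n x 1
omega_hat = np.linalg.pinv(A_hat) @ Y                            # omega_hat(t) = A(x_hat)^+ y(t)
```

`np.linalg.pinv` computes the Moore-Penrose pseudo-inverse via an SVD.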

In the presence of systematic noise $h \neq 0$, the sensor data is distorted as $y(t, h) = A(x, h)\omega(t)$, and therefore the distorted projection matrix is given by $\Pi_F(h) = I - y(t, h)y(t, h)^+$. Assuming the asymptotic case, we have to minimize the perturbed cost function

$$f(x, h) = a(x)^T\Pi_F(h)\, a(x). \tag{3.5}$$
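The bias caused by a systematic error can be observed in (3.5) without any sensor noise. The sketch below stacks $N$ noise-free snapshots into a data matrix $Y(h)$ (our reading of $y(t, h)y(t, h)^+$ for a sequence of measurements), builds $\Pi_F(h) = I - Y(h)Y(h)^+$ and minimizes $f(x, h)$ on a grid while the cost keeps using the unperturbed lead field. The inverse-distance lead field, the position errors $h$ and all numbers are hypothetical; `rcond` is raised above NumPy's default so that round-off singular values of the rank-one matrix $Y$ are discarded.

```python
import numpy as np

def lead_vector(x, sensors):
    """Hypothetical real lead field: inverse distance to a source at 2*(cos x, sin x)."""
    source = 2.0 * np.array([np.cos(x), np.sin(x)])
    return 1.0 / np.linalg.norm(sensors - source, axis=1)

rng = np.random.default_rng(7)
n, N = 8, 200
angles = 2 * np.pi * np.arange(n) / n
sensors = np.column_stack([np.cos(angles), np.sin(angles)])   # positions assumed by the model
h = 0.05 * rng.standard_normal((n, 2))                        # assumed sensor position errors
x_true = 0.3
omega = rng.standard_normal(N)

def projector_F(h):
    """Pi_F(h) = I - Y(h) Y(h)^+ for noise-free data recorded by sensors at sensors + h."""
    Y = np.outer(lead_vector(x_true, sensors + h), omega)
    return np.eye(n) - Y @ np.linalg.pinv(Y, rcond=1e-10)

def cost(x, Pi_F):
    """f(x, h) = a(x)^T Pi_F(h) a(x) with the unperturbed model lead field, cf. (3.5)."""
    a = lead_vector(x, sensors)
    return a @ Pi_F @ a

Pi_0, Pi_h = projector_F(np.zeros((n, 2))), projector_F(h)
grid = np.linspace(0.0, 2 * np.pi, 3601)
x_hat_0 = grid[np.argmin([cost(x, Pi_0) for x in grid])]   # estimate without systematic error
x_hat_h = grid[np.argmin([cost(x, Pi_h) for x in grid])]   # estimate with systematic error
```

`x_hat_0` recovers the true position up to the grid spacing, whereas `x_hat_h` is generally shifted; this shift is the kind of estimation error the present work sets out to quantify.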

3.3. Vector-valued problem

We now consider a three-dimensional approach. In this case, $a(x) \in \mathbb{R}^{n\times 3}$ and $\operatorname{rank}(P) = m$ hold, and we cannot use a simple projection like (3.3). We will compare the subspace spanned by the forward solution at an estimated source position with the signal subspace, represented by $\Pi$, by means of principal angles.

Principal angles: definition

We use the definition of principal angles given in [27]. Let $F$ and $G$ be subspaces of $\mathbb{R}^r$ whose dimensions satisfy $q = \dim(F) \ge \dim(G) = p \ge 1$. The principal angles $\Theta_1, \dots, \Theta_p \in [0, \pi/2]$ are defined recursively by

$$\cos(\Theta_k) = \max_{u\in F}\max_{v\in G} u^Tv =: u_k^Tv_k$$

subject to

$$\|u\|_2 = \|v\|_2 = 1, \qquad u^Tu_i = 0, \quad v^Tv_i = 0, \quad i = 1, \dots, k-1,$$

where $u_i, v_i$ denote the principal vectors belonging to the principal angles. This definition is somewhat cumbersome. A simple calculation method is available, though.

Principal angles: calculation

We assume the bases of the subspaces in question are given as matrices. Let $A \in \mathbb{R}^{r\times p}$ and $B \in \mathbb{R}^{r\times q}$ be of full column rank and $0 < p \le q \le r$. [27] describes a Householder orthogonalization to obtain orthonormal bases of the ranges of $A$ and $B$. We use the singular value decomposition (SVD) as proposed in [12]. Assume $U_A \in \mathbb{R}^{r\times p}$ and $U_B \in \mathbb{R}^{r\times q}$ are orthonormal bases of the spaces spanned by $A$ and $B$ obtained through the SVD, i.e. $U_A$ and $U_B$ consist of the left singular vectors belonging to the nonzero singular values. We investigate the singular value decomposition in more detail in Section 5.1. We define

$$M := U_A^TU_B \in \mathbb{R}^{p\times q}$$

and its singular value decomposition $M = U_MS_MV_M^T$, $S_M = \operatorname{diag}(\sigma_1, \dots, \sigma_p)$. If $\sigma_1 \ge \dots \ge \sigma_p$, then the principal angles are given by

$$\cos(\Theta_k) = \sigma_k.$$

Figure 3.1.: Example of principal angles in three dimensions. Left: two two-dimensional subspaces. The principal angles are designated by $\alpha$ and $\beta$; $\beta = 0$ since the two subspaces share one dimension. Right: one one-dimensional and one two-dimensional subspace. The principal angle is the actual angle between vector and plane.
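The SVD-based calculation can be sketched as follows; the example reproduces the two situations of Figure 3.1. The function name is hypothetical, and taking the arccosine of the singular values follows the formula above. Note that the arccosine loses accuracy for angles close to zero, for which sine-based formulas are usually preferred.

```python
import numpy as np

def principal_angles(A, B):
    """Principal angles between range(A) and range(B).

    A: r x p and B: r x q with full column rank and p <= q.
    Returns Theta_1 <= ... <= Theta_p in radians.
    """
    U_A = np.linalg.svd(A, full_matrices=False)[0]          # orthonormal basis of range(A)
    U_B = np.linalg.svd(B, full_matrices=False)[0]          # orthonormal basis of range(B)
    sigma = np.linalg.svd(U_A.T @ U_B, compute_uv=False)    # M = U_A^T U_B, descending order
    return np.arccos(np.clip(sigma, -1.0, 1.0))             # cos(Theta_k) = sigma_k

# Figure 3.1, left: the xy-plane and the xz-plane share the x-axis.
xy = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
xz = np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]])
angles_planes = principal_angles(xy, xz)

# Figure 3.1, right: a vector and a plane.
v = np.array([[1.0], [0.0], [1.0]])
angle_vector_plane = principal_angles(v, xy)
```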

The principal angles $\Theta_1, \dots, \Theta_p$ reflect the similarity between the subspaces spanned by $A$ and $B$ in $\mathbb{R}^r$. If $r = 3$, the principal angles are the actual angles between the subspaces, as illustrated in Figure 3.1.

To achieve a quadratic form as in (3.3), we write the squared cosine of the smallest principal angle as

$$f(x) = \lambda_{\max}\left(U_A(x)^T\,\Pi\, U_A(x)\right) \tag{3.6}$$

with $\lambda_{\max}$ denoting the maximum eigenvalue of the enclosed expression and $U_A(x)$ the left singular vectors of the lead-field matrix $A(x)$. Since $\lambda_{\max}(U_A(x)^T\Phi_s\Phi_s^TU_A(x)) = \sigma_1^2$ for the largest singular value $\sigma_1$ of $U_A(x)^T\Phi_s$, (3.6) calculates the square of the cosine of the smallest principal angle between the two subspaces. Once again, the assumption of linear independence of the $3m$ columns of $A$ ensures $f(x) = 1$ if and only if $x$ is an actual source position.

In the presence of systematic noise, the cost function is constructed akin to (3.5) as

$$f(x, h) = \lambda_{\max}\left(U_A(x)^T\,\Pi(h)\, U_A(x)\right). \tag{3.7}$$

In the following two chapters, we will lay the foundation for the derivatives of (3.6) and (3.7) with respect to $x$ and $h$.
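Cost function (3.6) can be evaluated with one SVD and one small symmetric eigenvalue problem. In this sketch, $A(x)$ is replaced by hypothetical random $n \times 3$ blocks, and the signal subspace is taken from the noise-free lead fields of two sources; the final lines cross-check the value against the squared cosine of the smallest principal angle.

```python
import numpy as np

def music_cost_3d(A_x, Pi):
    """f = lambda_max(U_A^T Pi U_A) with U_A the left singular vectors of A(x), cf. (3.6)."""
    U_A = np.linalg.svd(A_x, full_matrices=False)[0]
    return np.linalg.eigvalsh(U_A.T @ Pi @ U_A)[-1]     # eigvalsh sorts ascending

rng = np.random.default_rng(1)
n, m = 12, 2
A_sources = rng.standard_normal((n, 3 * m))   # hypothetical lead fields, 3 columns per source
Phi_s = np.linalg.svd(A_sources, full_matrices=False)[0]   # noise-free signal subspace
Pi = Phi_s @ Phi_s.T

f_source = music_cost_3d(A_sources[:, 3:6], Pi)   # candidate equals the second source
A_other = rng.standard_normal((n, 3))              # candidate unrelated to the sources
f_other = music_cost_3d(A_other, Pi)

# Cross-check: squared cosine of the smallest principal angle.
U_other = np.linalg.svd(A_other, full_matrices=False)[0]
cos_smallest = np.linalg.svd(U_other.T @ Phi_s, compute_uv=False)[0]
```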


4. Derivatives of eigenvalues and eigenvectors

4.1. Introduction

In the next two chapters, we will lay the foundation for the partial derivatives of the 3D MUSIC cost function. In the 1D case (3.4), derivatives w.r.t. $x$ and $h$ are straightforward and known (see [21] and Section 10.2.1). To our knowledge, derivatives for the 3D case have not yet been stated in the literature. Recalling (3.6), we see that derivatives of this MUSIC cost function will require the derivative of a function of the form $f(x) = \lambda(A(x))$, where $\lambda$ denotes an eigenvalue of the matrix function $A$.

For the rest of this section, we assume the matrix $A(x) \in \mathbb{R}^{n\times n}$, $n \in \mathbb{N}$, is symmetric, depends continuously on $x \in \mathbb{R}^m$, is sufficiently smooth, and the derivatives of $A$ with respect to $x$ are available up to any required order. The eigenvalues $\lambda_1(x), \dots, \lambda_n(x)$ and eigenvectors $u_1(x), \dots, u_n(x)$ of $A$ are known pointwise, and the eigenvectors are normalized as $u_j^Tu_j = 1$. We define an eigenvalue $\lambda_i$ to be unique if $\lambda_i \neq \lambda_j$ for all $x$ and $i \neq j$. Conversely, we define $\lambda_i$ to be a repeated eigenvalue if $\exists x: \lambda_i = \lambda_j$, $j \neq i$. If we want to analyze the behavior of the MUSIC algorithm, we will naturally have to investigate eigenvalue derivatives of symmetric matrices. As we will see later, the calculation of higher-order eigenvalue derivatives will also require derivatives of the corresponding eigenvectors.

A lot of research has been done investigating the existence of derivatives of eigensystems. If $A$ is an analytic function with distinct eigenvalues and $x \in \mathbb{R}^1$, there are well-known proofs that its eigenvalues and eigenvectors are also analytic functions (see Rellich, [60] Chapter 1.1). This was proven to extend to the case of repeated eigenvalues if $A$ is Hermitian [4]. Unfortunately, for $x \in \mathbb{R}^m$ with $m > 1$, there are simple examples in which the eigenvalues and eigenvectors are not differentiable, although directional derivatives are known to exist [67]. Despite this result, our experience has shown that our proposed algorithm gives valid information on the derivatives of the MUSIC cost function.

To our knowledge, Nelson [51] was among the first to propose a simple algorithm to compute the derivatives of eigenvalues and eigenvectors. Mills-Curran [46] provided a procedure for the case of repeated eigenvalues with distinct eigenvalue derivatives. Andrew and Tan [5] expanded this procedure to symmetric eigensystems with repeated eigenvalues and repeated eigenvalue derivatives up to an order $k$. [71] gave an extension to general complex-valued eigensystems for the same case of repeated eigenvalues. It is interesting to note that the approach shown there is very similar to [75], which we will use extensively in Chapter 5, where we treat the derivatives of singular vectors. An iterative procedure for large matrices is presented in [6]. We will use and slightly expand a comparatively old approach of Lee and Jung [39], because it is


numerically stable, easy to implement and easy to expand to higher derivatives. For the rest of this chapter, we will drop the dependency of all terms on $x$ for notational convenience.

4.2. Algorithm

4.2.1. The method of Lee and Jung

We extend the approach of Lee and Jung [39] to calculate the derivative of a unique eigenvalue and its corresponding eigenvector. Consider the generalized eigensystem

$$Au_j = \lambda_jMu_j, \tag{4.1}$$

where $A$ is symmetric positive semi-definite and $M$ is symmetric positive definite. $\lambda_j$ is the $j$th eigenvalue and $u_j$ is the corresponding eigenvector of $A$, where $\lambda_j$ is assumed to be unique. $u_j$ is normalized with respect to $M$ as

$$u_j^TMu_j = 1. \tag{4.2}$$

Differentiating both (4.1) and (4.2) and rearranging leads to

$$(A - \lambda_jM)u_j' - Mu_j\lambda_j' = \lambda_jM'u_j - A'u_j, \tag{4.3}$$

$$u_j'^TMu_j = -0.5\,u_j^TM'u_j, \tag{4.4}$$

where $(\cdot)'$ indicates the derivative with respect to a design parameter. Lee and Jung write equations (4.3) and (4.4) as a single matrix equation

$$\begin{bmatrix} A - \lambda_jM & -Mu_j \\ -u_j^TM & 0 \end{bmatrix}\begin{bmatrix} u_j' \\ \lambda_j' \end{bmatrix} = \begin{bmatrix} (\lambda_jM' - A')u_j \\ 0.5\,u_j^TM'u_j \end{bmatrix}. \tag{4.5}$$

It can be shown ([39]) that the matrix on the left-hand side of (4.5) is nonsingular if $\lambda_j$ is unique (including the case $\lambda_j = 0$, $\lambda_k \neq 0$, $k \neq j$). Solving (4.5) for $[u_j'^T, \lambda_j']^T$ gives the desired derivatives of the eigenvalue and eigenvector. An LR decomposition of the matrix on the left-hand side can be used to minimize the computational effort. In our application, we have $M = I$ and $M' = 0$.
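A minimal sketch of (4.5) for $M = I$, $M' = 0$, assuming a hypothetical symmetric matrix function $A(x) = A_0 + xA_1$ built from random matrices. The result is compared with central finite differences, for which the sign of the numerically computed eigenvector has to be aligned first. `np.linalg.solve` uses an LU factorization with partial pivoting internally (LAPACK `gesv`).

```python
import numpy as np

def eig_derivative_lee_jung(A, dA, lam, u):
    """First derivatives (u', lambda') of a unique eigenpair via (4.5) with M = I, M' = 0."""
    n = A.shape[0]
    K = np.zeros((n + 1, n + 1))
    K[:n, :n] = A - lam * np.eye(n)      # A - lambda_j M
    K[:n, n] = -u                        # -M u_j
    K[n, :n] = -u                        # -u_j^T M
    rhs = np.append(-dA @ u, 0.0)        # [(lambda_j M' - A') u_j ; 0.5 u_j^T M' u_j]
    sol = np.linalg.solve(K, rhs)
    return sol[:n], sol[n]

# Hypothetical symmetric matrix function A(x) = A0 + x * A1, so A'(x) = A1.
rng = np.random.default_rng(2)
n, j, x = 5, 2, 0.3
B0, B1 = rng.standard_normal((2, n, n))
A0, A1 = B0 + B0.T, B1 + B1.T

def eigpair(x):
    w, V = np.linalg.eigh(A0 + x * A1)
    return w[j], V[:, j]

lam, u = eigpair(x)
du, dlam = eig_derivative_lee_jung(A0 + x * A1, A1, lam, u)

# Central finite differences; eigenvector signs are aligned with u first.
eps = 1e-6
lam_p, u_p = eigpair(x + eps)
lam_m, u_m = eigpair(x - eps)
u_p, u_m = u_p * np.sign(u_p @ u), u_m * np.sign(u_m @ u)
dlam_fd = (lam_p - lam_m) / (2 * eps)
du_fd = (u_p - u_m) / (2 * eps)
```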

4.2.2. Extension to arbitrary derivatives

The extension of (4.5) to derivatives of arbitrary order is straightforward. We use the multi-index notation introduced in Appendix A.1 to describe higher-order partial derivatives. We form the $\alpha$-derivative of (4.1) and (4.2), rearrange the result in the same way as (4.5) and get

$$\begin{bmatrix} A - \lambda_jM & -Mu_j \\ -u_j^TM & 0 \end{bmatrix}\begin{bmatrix} u_j^{(\alpha)} \\ \lambda_j^{(\alpha)} \end{bmatrix} = \begin{bmatrix} L_1 - L_2 \\ \tfrac{1}{2}L_3 \end{bmatrix}, \tag{4.6}$$


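with

$$L_1 := \sum_{\substack{\beta<\alpha\\ \beta\neq 0}}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}\lambda_j^{(\beta)}M^{(\alpha-\beta-\gamma)}u_j^{(\gamma)} + \lambda_j\sum_{\gamma<\alpha}\binom{\alpha}{\gamma}M^{(\alpha-\gamma)}u_j^{(\gamma)}, \tag{4.7}$$

$$L_2 := \sum_{\beta<\alpha}\binom{\alpha}{\beta}A^{(\alpha-\beta)}u_j^{(\beta)}, \tag{4.8}$$

$$L_3 := \sum_{\substack{\beta<\alpha\\ \beta\neq 0}}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}{u_j^{(\gamma)}}^TM^{(\alpha-\beta-\gamma)}u_j^{(\beta)} + \sum_{\gamma<\alpha}\binom{\alpha}{\gamma}{u_j^{(\gamma)}}^TM^{(\alpha-\gamma)}u_j, \tag{4.9}$$

where for the multi-indices $\alpha, \beta \in \mathbb{N}_0^n$ we define $\beta < \alpha$ if $\exists i: \beta_i < \alpha_i$ and $\beta_j \le \alpha_j$ for $j \neq i$.

Proof of (4.7) and (4.8): Forming the $\alpha$-th derivative of (4.1) with the Leibniz rule, we get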

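$$(Au_j)^{(\alpha)} = (\lambda_jMu_j)^{(\alpha)},$$

$$\sum_{\beta\le\alpha}\binom{\alpha}{\beta}A^{(\alpha-\beta)}u_j^{(\beta)} = \sum_{\beta\le\alpha}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}\lambda_j^{(\beta)}M^{(\alpha-\beta-\gamma)}u_j^{(\gamma)}. \tag{4.10}$$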

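Rearranging the left and right sides of (4.10), we get

$$\text{left side} = Au_j^{(\alpha)} + \sum_{\beta<\alpha}\binom{\alpha}{\beta}A^{(\alpha-\beta)}u_j^{(\beta)},$$

$$\begin{aligned}\text{right side} &= \lambda_j^{(\alpha)}Mu_j + \sum_{\beta<\alpha}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}\lambda_j^{(\beta)}M^{(\alpha-\beta-\gamma)}u_j^{(\gamma)}\\ &= \lambda_j^{(\alpha)}Mu_j + \sum_{\substack{\beta<\alpha\\ \beta\neq0}}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}\lambda_j^{(\beta)}M^{(\alpha-\beta-\gamma)}u_j^{(\gamma)} + \lambda_j\sum_{\gamma\le\alpha}\binom{\alpha}{\gamma}M^{(\alpha-\gamma)}u_j^{(\gamma)}\\ &= \lambda_j^{(\alpha)}Mu_j + \sum_{\substack{\beta<\alpha\\ \beta\neq0}}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}\lambda_j^{(\beta)}M^{(\alpha-\beta-\gamma)}u_j^{(\gamma)} + \lambda_j\sum_{\gamma<\alpha}\binom{\alpha}{\gamma}M^{(\alpha-\gamma)}u_j^{(\gamma)} + \lambda_jMu_j^{(\alpha)}.\end{aligned}$$

Moving the terms containing $u_j^{(\alpha)}$ and $\lambda_j^{(\alpha)}$ to the left and all other terms to the right, these left and right sides form the first row of (4.6).

Proof of (4.9):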


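Forming the $\alpha$-th derivative of (4.2), we get

$$\begin{aligned}(u_j^TMu_j)^{(\alpha)} &= 1^{(\alpha)},\\ \sum_{\beta\le\alpha}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}{u_j^{(\gamma)}}^TM^{(\alpha-\beta-\gamma)}u_j^{(\beta)} &= 0,\\ \sum_{\substack{\beta<\alpha\\ \beta\neq0}}\sum_{\gamma\le\alpha-\beta}\binom{\alpha}{\beta}\binom{\alpha-\beta}{\gamma}{u_j^{(\gamma)}}^TM^{(\alpha-\beta-\gamma)}u_j^{(\beta)} + \sum_{\gamma<\alpha}\binom{\alpha}{\gamma}{u_j^{(\gamma)}}^TM^{(\alpha-\gamma)}u_j &= -2u_j^TMu_j^{(\alpha)},\end{aligned}$$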

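which proves (4.9). (4.6) has the nice feature that, regardless of the order of the derivative, the matrix on the left-hand side of the linear system remains unchanged; only the right-hand side, which depends on derivatives of lower order, has to be updated. Therefore, as long as $\lambda_j$ is unique, the system is always solvable, and the derivatives can be computed recursively in increasing order. Using an LR decomposition to reduce the computational load is a natural choice.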
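For a scalar parameter, $M = I$ and $M' = M'' = 0$, the case $\alpha = 2$ reads $(A - \lambda_j I)u_j'' - u_j\lambda_j'' = 2\lambda_j'u_j' - A''u_j - 2A'u_j'$ together with $-u_j^Tu_j'' = u_j'^Tu_j'$, which follows from differentiating $u_j^Tu_j = 1$ twice. The sketch below reuses the same left-hand matrix for both orders and compares with second-order finite differences; the quadratic matrix function $A(x) = A_0 + xA_1 + x^2A_2$ is a hypothetical test case.

```python
import numpy as np

def lee_jung_matrix(A, lam, u):
    """Left-hand side of (4.5)/(4.6) for M = I; identical for every derivative order."""
    n = A.shape[0]
    K = np.zeros((n + 1, n + 1))
    K[:n, :n] = A - lam * np.eye(n)
    K[:n, n] = -u
    K[n, :n] = -u
    return K

rng = np.random.default_rng(3)
n, j, x = 5, 1, 0.2
B0, B1, B2 = rng.standard_normal((3, n, n))
A0, A1, A2 = B0 + B0.T, B1 + B1.T, B2 + B2.T

def A_of(x):
    return A0 + x * A1 + x**2 * A2         # hypothetical A(x)

dA, ddA = A1 + 2 * x * A2, 2 * A2          # A'(x), A''(x)

def eigpair(x):
    w, V = np.linalg.eigh(A_of(x))
    return w[j], V[:, j]

lam, u = eigpair(x)
K = lee_jung_matrix(A_of(x), lam, u)

# First order: right-hand side [-A' u ; 0].
sol1 = np.linalg.solve(K, np.append(-dA @ u, 0.0))
du, dlam = sol1[:n], sol1[n]

# Second order: same K, right-hand side [L1 - L2 ; L3 / 2] built from first-order results.
rhs2 = np.append(2 * dlam * du - ddA @ u - 2 * dA @ du, du @ du)
sol2 = np.linalg.solve(K, rhs2)
ddu, ddlam = sol2[:n], sol2[n]

# Second-order central finite differences with sign-aligned eigenvectors.
eps = 1e-4
lam_p, u_p = eigpair(x + eps)
lam_m, u_m = eigpair(x - eps)
u_p, u_m = u_p * np.sign(u_p @ u), u_m * np.sign(u_m @ u)
ddlam_fd = (lam_p - 2 * lam + lam_m) / eps**2
ddu_fd = (u_p - 2 * u + u_m) / eps**2
```

To actually reuse one factorization, `scipy.linalg.lu_factor` and `scipy.linalg.lu_solve` could replace the two `np.linalg.solve` calls.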


5. Derivatives of singular values and singular vectors

5.1. Introduction

In the last chapter, we assumed that the derivatives of the matrix $A$ whose eigenvalue derivatives we calculate are given up to arbitrary order. Regarding the MUSIC cost function (3.6), we see that these derivatives will require the derivatives of the left singular vectors of the lead-field matrix.

Let $A \in \mathbb{R}^{m\times n}$, $m, n \in \mathbb{N}$, $m \ge n$ and $\operatorname{rank}(A) = o \le n$. The singular value decomposition (SVD) of $A$ is a factorization

$$A(x) = USV^T,$$

where $U \in \mathbb{R}^{m\times m}$ and $V \in \mathbb{R}^{n\times n}$ are orthogonal matrices and $S \in \mathbb{R}^{m\times n}$ with $\operatorname{diag}(S) = (\sigma_1, \dots, \sigma_o)$ and zeros filling the remaining diagonal and off-diagonal elements of $S$. $\sigma_i$ is called a singular value, and the columns of $U$ and $V$ form the left and right singular vectors, respectively. The singular values are nonnegative by construction and are typically arranged in descending order. We use the operator $\operatorname{diag}(\cdot)$ akin to the built-in Matlab function diag which, given $x \in \mathbb{R}^m$, generates an $m\times m$ diagonal matrix with the elements of $x$ on the diagonal or, given $X \in \mathbb{R}^{m\times m}$, generates a vector $x \in \mathbb{R}^m$ consisting of the diagonal elements of $X$. Efficient numerical methods to compute the SVD are well known [27].

Let $A(x)$ depend smoothly on $x \in \mathbb{R}$ and let the derivatives of $A$ with respect to $x$ be available up to any required order. The existence and non-uniqueness of an analytic singular value decomposition (ASVD)

$$A(x) = U(x)S(x)V(x)^T, \tag{5.1}$$

where $A$, $U$, $S$ and $V$ are all analytic functions, was first proven by Bunse-Gerstner et al. [14], along with an algorithm to calculate ASVDs. [45], [34] and [75] proposed additional algorithms for computing the ASVD. Derivatives of singular values and vectors have not been investigated on their own, but Wright [75] shows means to calculate these derivatives in order to use them in an explicit Runge-Kutta scheme. [55] explicitly investigates derivatives of the singular values but basically repeats the results of [75]. To our knowledge, there is no theory on the existence of singular value derivatives if $A$ is not analytic but, as in the case of eigenvalue derivatives, our numerical experience shows that the algorithm we present in the following sections gives feasible results.


We normalize the singular vectors as

$$U(x)^TU(x) = I, \tag{5.2}$$

$$V(x)^TV(x) = I. \tag{5.3}$$

The first $o$ left singular vectors, i.e. the first $o$ columns of $U$, are determined uniquely (up to sign, provided the singular values are distinct). The remaining columns of $U$ are chosen such that $U$ forms an orthonormal basis of $\mathbb{R}^m$, although they are not needed to build $A$. $V$ is built analogously. The normalization (5.2) also holds if only the first $o$ columns of $U$ are considered but, as will become apparent, we also need the following normalization, which only holds if $U$ and $V$ are square orthogonal matrices:

$$U(x)U(x)^T = I, \tag{5.4}$$

$$V(x)V(x)^T = I. \tag{5.5}$$

Computing full $U$ and $V$ matrices for given $x$ poses no problem, since basically all numerical routines provide means to calculate a full SVD (often this is the default, and the rectangular versions of $U$, $V$ are called 'economy-size' or 'thin'). For the rest of this chapter, as before, we will not indicate the dependence on $x$ explicitly for ease of notation.
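As an example of such a routine, NumPy's `np.linalg.svd` returns the full factors by default (`full_matrices=True`) and the rectangular ones with `full_matrices=False`. The check below, on an arbitrary random matrix, illustrates that (5.2) holds in both cases while (5.4) requires the square $U$.

```python
import numpy as np

rng = np.random.default_rng(4)
m, n = 6, 4
A = rng.standard_normal((m, n))

U_full, s, Vt = np.linalg.svd(A)                        # U_full: m x m, Vt: n x n
U_thin, _, _ = np.linalg.svd(A, full_matrices=False)    # U_thin: m x n

# (5.2) holds for both; (5.4), U U^T = I, only holds for the square U.
gram_full = U_full.T @ U_full
outer_full = U_full @ U_full.T
outer_thin = U_thin @ U_thin.T   # orthogonal projector onto range(A), rank n < m
```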

5.2. Proposed method

We adapt and extend the approach by Wright ([75]). The following section therefore largely follows his work. Wright uses derivatives of singular values and vectors to build ASVDs; the usefulness of this approach for computing singular value derivatives in their own right is not explored. Furthermore, only a non-defective square $A$ is examined explicitly. We expand this approach to rectangular matrices and provide closed-form expressions. These closed-form expressions allow the calculation of arbitrary derivatives in the same form as (4.6). Furthermore, we examine the case $\sigma_p = \sigma_q$, $\sigma_p' = \sigma_q'$, $\sigma_p'' \neq \sigma_q''$, $p, q = 1, \dots, o$, $p < q$, where $(\cdot)'$ indicates the derivative w.r.t. a design parameter as before. To our knowledge, this case has not yet been treated in the existing literature.

5.2.1. Distinct singular values

We differentiate (5.1), (5.2) and (5.3):

$$A' = U'SV^T + US'V^T + USV'^T, \tag{5.6}$$

$$0 = U^TU' + U'^TU,$$

$$0 = V^TV' + V'^TV.$$

By setting $Z := U^TU'$ and $W := V^TV'$ we get

$$Z + Z^T = 0, \qquad W + W^T = 0,$$


thus $Z$ and $W$ are anti-symmetric and $\operatorname{diag}(Z) = \operatorname{diag}(W) = 0$. With (5.4) and (5.5), we have $U' = UZ$ and $V' = VW$, so we now have to determine $Z$ and $W$ to calculate the derivatives of the singular vectors. We set $Q = U^TA'V$ and restate (5.6) as

$$S' = Q - ZS + SW. \tag{5.7}$$

Since $Z$ and $W$ are anti-symmetric and $S$ is diagonal, we have $\operatorname{diag}(S') = \operatorname{diag}(Q)$, and thus the derivatives of the singular values are already determined. Wright continues to calculate the off-diagonal elements of $Z$ and $W$ element-wise using (5.7). W.l.o.g. we assume $p < q$, $p = 1, \dots, o-1$, $q = 2, \dots, o$ and get

$$\sigma_qZ_{pq} - \sigma_pW_{pq} = Q_{pq}, \tag{5.8}$$

$$-\sigma_pZ_{pq} + \sigma_qW_{pq} = Q_{qp}. \tag{5.9}$$

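Excluding the trivial case of either $\sigma_p = 0$ or $\sigma_q = 0$, these two equations can be used to obtain $Z_{pq}$ and $W_{qp}$:

$$Z_{pq} = \frac{\sigma_pQ_{qp} + \sigma_qQ_{pq}}{\sigma_q^2 - \sigma_p^2}, \tag{5.10}$$

$$W_{qp} = \frac{Q_{pq} - \sigma_qZ_{pq}}{\sigma_p}. \tag{5.11}$$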

Clearly, these equations only hold for $\sigma_q \neq \sigma_p$; the case $\sigma_q = \sigma_p$ will be examined in Section 5.2.3. Since $p, q \le o$, several elements of $Z$ and $W$ still have to be determined. Let $p = 1, \dots, o$, $k = o+1, \dots, m$ and $l = o+1, \dots, n$. Then (5.7) gives information about the remaining elements as

$$\sigma_pZ_{kp} = Q_{kp}, \qquad \sigma_pW_{pl} = -Q_{pl}. \tag{5.12}$$

The remaining blocks $Z_{kl}$, $W_{kl}$, $k, l > o$, contain no information and are therefore set to zero, as proposed by Wright.
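The element-wise formulas can be sketched for a matrix with full column rank ($o = n \le m$), so that $W$ has no block from (5.12); distinct singular values are assumed, and the test matrix $A(x) = A_0 + xA_1$ is a hypothetical random example. Two checks follow: $U'SV^T + US'V^T + USV'^T$ must reproduce $A'$ as in (5.6), and the singular values and the first $n$ singular vector pairs are compared with sign-aligned central finite differences (the remaining columns of $U$ are not unique and are therefore excluded).

```python
import numpy as np

def svd_derivatives(A, dA):
    """First derivatives of a full SVD via (5.7)-(5.12).

    Assumes m >= n, rank(A) = n (so o = n) and distinct singular values.
    Returns U, s, V, U' = U Z, s' = diag(Q), V' = V W.
    """
    m, n = A.shape
    U, s, Vt = np.linalg.svd(A)          # full SVD: U is m x m, V is n x n
    V = Vt.T
    Q = U.T @ dA @ V                     # Q = U^T A' V (m x n)
    o = n
    Z, W = np.zeros((m, m)), np.zeros((n, n))
    for p in range(o):
        for q in range(p + 1, o):
            Z[p, q] = (s[p] * Q[q, p] + s[q] * Q[p, q]) / (s[q]**2 - s[p]**2)   # (5.10)
            W[q, p] = (Q[p, q] - s[q] * Z[p, q]) / s[p]                          # (5.11)
            Z[q, p], W[p, q] = -Z[p, q], -W[q, p]                                # anti-symmetry
        for k in range(o, m):
            Z[k, p] = Q[k, p] / s[p]                                             # (5.12)
            Z[p, k] = -Z[k, p]
    # Z[k, l] for k, l >= o carries no information and stays zero.
    return U, s, V, U @ Z, np.diag(Q).copy(), V @ W

rng = np.random.default_rng(5)
m, n, x = 6, 4, 0.1
A0, A1 = rng.standard_normal((2, m, n))        # hypothetical A(x) = A0 + x * A1
U, s, V, dU, ds, dV = svd_derivatives(A0 + x * A1, A1)

# Check against (5.6): U'SV^T + US'V^T + USV'^T must equal A' = A1.
S = np.zeros((m, n))
S[:n, :n] = np.diag(s)
dS = np.zeros((m, n))
dS[:n, :n] = np.diag(ds)
dA_rebuilt = dU @ S @ V.T + U @ dS @ V.T + U @ S @ dV.T

# Central finite differences; each pair (u_p, v_p) gets the sign that aligns u_p with U.
eps = 1e-6
Up, sp, Vtp = np.linalg.svd(A0 + (x + eps) * A1)
Um, sm, Vtm = np.linalg.svd(A0 + (x - eps) * A1)
ds_fd = (sp - sm) / (2 * eps)
sign_p = np.sign(np.sum(Up[:, :n] * U[:, :n], axis=0))
sign_m = np.sign(np.sum(Um[:, :n] * U[:, :n], axis=0))
dU_fd = (Up[:, :n] * sign_p - Um[:, :n] * sign_m) / (2 * eps)
dV_fd = (Vtp.T * sign_p - Vtm.T * sign_m) / (2 * eps)
```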

5.2.2. Closed-form expressions of the derivatives

The formulas (5.10), (5.11) and (5.12) of the previous section allow the calculation of $Z$ and $W$ element-wise. It may be favorable to obtain a closed form for both. A complete closed form is not possible, as becomes obvious when considering (5.10), (5.11) and (5.12): for $n \neq o \neq m$, several cases for the calculation of $Z$ and $W$ have to be distinguished (generally to avoid dividing by 0). However, for each of these separate cases, closed-form expressions are possible. We decompose $Z$ and $W$ into submatrices as

$$Z = \begin{bmatrix} Z_1 & Z_2 \\ -Z_2^T & 0 \end{bmatrix}, \qquad W = \begin{bmatrix} W_1 & W_2 \\ -W_2^T & 0 \end{bmatrix},$$


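where $Z_1, W_1 \in \mathbb{R}^{o\times o}$ are anti-symmetric, $Z_2 \in \mathbb{R}^{o\times(m-o)}$ and $W_2 \in \mathbb{R}^{o\times(n-o)}$. The following expressions are inspired by Anderson [3], where a closed expression for the derivatives of singular vectors is stated. Unfortunately, only some of the derivatives shown there are correct, since Anderson sets $Z_1 = W_1 = 0$, which is erroneous. To see this, consider $A$ to be square and of full rank. In this case, $Z_2$ and $W_2$ vanish, and the singular vector derivatives would always be zero, which is obviously wrong.

We split the singular value decomposition into the parts that do and do not contribute to $A$:

$$A = \begin{bmatrix} U_1 & U_2 \end{bmatrix}\begin{bmatrix} \Lambda & 0 \\ 0 & 0 \end{bmatrix}\begin{bmatrix} V_1^T \\ V_2^T \end{bmatrix},$$

where $U_1 \in \mathbb{R}^{m\times o}$, $U_2 \in \mathbb{R}^{m\times(m-o)}$, $V_1 \in \mathbb{R}^{n\times o}$, $V_2 \in \mathbb{R}^{n\times(n-o)}$ and $\Lambda \in \mathbb{R}^{o\times o}$. Now, $Q$ is structured as

$$Q = \begin{bmatrix} Q_1 & Q_2 \\ Q_3 & Q_4 \end{bmatrix} = \begin{bmatrix} U_1^TA'V_1 & U_1^TA'V_2 \\ U_2^TA'V_1 & U_2^TA'V_2 \end{bmatrix}.$$

Rewriting (5.7) with these expressions, we immediately get ([3])

$$Z_2^T = -U_2^TA'V_1\Lambda^{-1}, \qquad W_2 = -\Lambda^{-1}U_1^TA'V_2, \tag{5.13}$$

and

$$\Lambda' = Q_1 - Z_1\Lambda + \Lambda W_1. \tag{5.14}$$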

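We introduce a new operator $\operatorname{vec}$, where $\operatorname{vec}(A)$ collects the elements strictly above the diagonal of $A$ row-wise in a vector. A simple example is shown in Appendix A.2. Now we can define $z := \operatorname{vec}(Z_1) \in \mathbb{R}^{\frac12 o(o-1)}$, $w := \operatorname{vec}(W_1) \in \mathbb{R}^{\frac12 o(o-1)}$ and $y := [\operatorname{vec}(Q_1), \operatorname{vec}(Q_1^T)] \in \mathbb{R}^{o(o-1)}$. Let

$$s_1 := [\sigma_2, \dots, \sigma_o, \sigma_3, \dots, \sigma_o, \dots, \sigma_{o-1}, \sigma_o, \sigma_o] \in \mathbb{R}^{\frac12 o(o-1)},$$

$$s_2 := [\underbrace{\sigma_1, \dots, \sigma_1}_{o-1\text{ times}}, \underbrace{\sigma_2, \dots, \sigma_2}_{o-2\text{ times}}, \dots, \sigma_{o-1}] \in \mathbb{R}^{\frac12 o(o-1)},$$

$$S_1 := \operatorname{diag}(s_1), \qquad S_2 := \operatorname{diag}(s_2).$$

(5.14) can now be regrouped as a system of linear equations

$$\underbrace{\begin{bmatrix} S_1 & -S_2 \\ -S_2 & S_1 \end{bmatrix}}_{=:S}\begin{bmatrix} z \\ w \end{bmatrix} = y. \tag{5.15}$$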

The inverse of the matrix on the left-hand side can easily be found using techniques for the inverse of a block matrix. The matrix in (5.15) has full rank for $\sigma_i \neq \sigma_j$. To see this, consider

$$\det(S) = \det(S_1)\det(S_1 - S_2S_1^{-1}S_2),$$

where $\det(S_1) = \prod_{i=1}^o \sigma_i^{i-1} \neq 0$. The matrix $S_1 - S_2S_1^{-1}S_2$ is also diagonal, and we have to check whether zero entries on its diagonal are possible. If we examine $s_2$ more closely, we see that it is separated into $o-1$ blocks of decreasing length. We consider block $l$ with length $o-l$, which belongs to $p = l$ and $q = l+1, \dots, o$. Assuming the first entry of this block has index $k$ in $s_2$, then

$$(S_1 - S_2S_1^{-1}S_2)_{kk} = \sigma_{l+1} - \sigma_l\frac{1}{\sigma_{l+1}}\sigma_l = \frac{\sigma_{l+1}^2 - \sigma_l^2}{\sigma_{l+1}},$$

which is zero only if $\sigma_l = \sigma_{l+1}$. The other entries of the block, $(\sigma_q^2 - \sigma_l^2)/\sigma_q$, can only vanish if $\sigma_q = \sigma_l$, which, since the singular values are arranged in decreasing order, again implies $\sigma_l = \sigma_{l+1}$. This proves that (5.15) is of full rank if $\sigma_i \neq \sigma_j$.

We now show the validity of (5.15) by proving that all equations in (5.8) and (5.9) are incorporated in (5.15). Let $p = 1, \dots, o-1$, $q = 2, \dots, o$, $p < q$, $i = 1, \dots, \frac12 o(o-1)$, $j = \frac12 o(o-1) + 1, \dots, o(o-1)$. We introduce the equalities

$$i = \sum_{k=1}^{p-1}(o-k) + q - p, \qquad j = i + \tfrac12 o(o-1), \tag{5.16}$$

where one can observe that $Q_{pq} = y(i)$ and $Q_{qp} = y(j)$. For given $i$, the elements of the $i$-th row of $S$ are zero except for $S_{ii}$ and $S_{ij}$. By construction, $(s_1)_i = \sigma_{1+p+(q-p-1)} = \sigma_q$ and $(s_2)_i = \sigma_p$. Therefore, the $i$-th row of the system of linear equations (5.15) is equivalent to (5.8). The same approach can be used to prove the equivalence between the $j$-th row of (5.15) and (5.9), which concludes the proof.

Higher derivatives of $Z$, $W$ and thus higher derivatives of $U$, $V$ and $S$ can be obtained by constructing derivatives of (5.13) and (5.15) analogously to (4.6). This will not be fleshed out here, but it is clear that we will again have the benefits of the LR decomposition, since the matrix $S$ stays the same.
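The closed form (5.15) can be checked against the element-wise formulas (5.10) and (5.11). In the sketch, `vec` collects the strictly upper triangular entries row-wise, which is the ordering produced by NumPy's `np.triu_indices`, and `vec_index` implements the index map (5.16); the singular values and $Q_1$ are hypothetical test data rather than quantities computed from an actual $A(x)$.

```python
import numpy as np

def vec(X):
    """Strictly upper triangular entries of X, collected row-wise."""
    return X[np.triu_indices(X.shape[0], k=1)]

def vec_index(p, q, o):
    """1-based position i of the pair (p, q), p < q (1-based), in vec(.), cf. (5.16)."""
    return sum(o - k for k in range(1, p)) + q - p

def solve_closed_form(sigma, Q1):
    """Solve (5.15) for z = vec(Z_1), w = vec(W_1); assumes distinct singular values."""
    o = len(sigma)
    rows, cols = np.triu_indices(o, k=1)                   # pairs (p, q) with p < q, row-wise
    S1, S2 = np.diag(sigma[cols]), np.diag(sigma[rows])    # s_1 holds sigma_q, s_2 holds sigma_p
    S = np.block([[S1, -S2], [-S2, S1]])
    y = np.concatenate([vec(Q1), vec(Q1.T)])
    zw = np.linalg.solve(S, y)
    half = len(rows)
    return zw[:half], zw[half:], S, S1, S2

# Hypothetical data: distinct singular values (descending) and a random Q_1 = U_1^T A' V_1.
rng = np.random.default_rng(6)
sigma = np.array([4.0, 3.0, 2.0, 1.0])
o = len(sigma)
Q1 = rng.standard_normal((o, o))
z, w, S, S1, S2 = solve_closed_form(sigma, Q1)

# Element-wise reference from (5.10) and (5.11).
z_ref, w_ref = [], []
for p in range(o):
    for q in range(p + 1, o):
        Zpq = (sigma[p] * Q1[q, p] + sigma[q] * Q1[p, q]) / (sigma[q]**2 - sigma[p]**2)
        Wqp = (Q1[p, q] - sigma[q] * Zpq) / sigma[p]
        z_ref.append(Zpq)
        w_ref.append(-Wqp)        # w stores the upper entries W_pq = -W_qp
```

Since $S_1$ and $S_2$ are diagonal, (5.15) decouples into $2\times 2$ systems; the dense solve is used here only to mirror the block form.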

5.2.3. Repeated singular values

Similar to repeated eigenvalues, the singular vectors associated to repeated singular valuesare not unique. In fact for σp = σq, any linear combination of the singular vectors up, uqis again a singular vector. As in the case of repeated eigenvalues (see for example [5]),one has to utilize information from higher derivatives of the SVD.Wright [75] already showed the derivation of Z and W in the case of one repeated

singular value pair with distinct derivatives. We will follow the derivation there, but willincorporate it in our closed form expression (5.15). Consider q = p+ 1 and σp = σq andσ′p 6= σ′q.We will not include the extension to multiple equal singular values σk = . . . = σl,k − l > 1, explicitly but as it will become clear, this extension will be a straightforwardcontinuation of our approach.All entries of Z,W except entries (p, q), (q, p) are computable. To see this, letS ∈ Ro(o−1)−2×o(o−1)−2 be the matrix S where rows i, j and columns j, i are eliminated,where the indices (i, j) are computed according to (5.16). z, w and q are constructedanalogously by erasing entries i, j. Now, by construction, the system of linear equations

$$\bar S \begin{bmatrix} \bar z \\ \bar w \end{bmatrix} = \bar q \tag{5.17}$$


is of full rank. In combination with (5.13), all entries of $Z, W$ except $(p,q)$, $(q,p)$ are known. Since $Q$ is readily computable, $S'$ is also known.

We introduce the concept of reduced matrices, which will simplify the notation. Let $Z_R$ be the reduced matrix of $Z$, where $(Z_R)_{kl} = Z_{kl}$ for $(k,l) \notin \{(p,q), (q,p)\}$ and $(Z_R)_{pq} = (Z_R)_{qp} = 0$. Now, for $Q, P \in \mathbb{R}^{m\times m}$,

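$$\begin{aligned}
(ZQ)_{pq} &= (Z_RQ)_{pq} + Z_{pq}Q_{qq},\\
(QZ)_{pq} &= (QZ_R)_{pq} + Q_{pp}Z_{pq},\\
(PZQ)_{pq} &= (PZ_RQ)_{pq} - P_{pq}Z_{pq}Q_{pq} + P_{pp}Z_{pq}Q_{qq}
\end{aligned}$$

hold, where the last identity uses the skew-symmetry $Z_{qp} = -Z_{pq}$.

If $\sigma_p = \sigma_q$, then (5.8) and (5.9) do not contain sufficient information to determine $Z_{pq}, W_{pq}$ uniquely. [75] calculates the respective derivatives and adds $(5.8)'$ to $(5.9)'$ to obtain a second equation. With the use of reduced matrices and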

$$Q' = U^TA''V - ZQ + QW, \tag{5.18}$$

we obtain

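$$\begin{aligned}
2(\sigma'_q - \sigma'_p)Z_{pq} + 2(\sigma'_q - \sigma'_p)W_{pq} = {}& (U^TA''V)_{pq} + (U^TA''V)_{qp}\\
&- (Z_RQ)_{pq} - (Z_RQ)_{qp}\\
&+ (QW_R)_{pq} + (QW_R)_{qp}.
\end{aligned}\tag{5.19}$$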
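To make the interplay of (5.8) and (5.19) concrete, the following Python sketch builds a hypothetical smooth SVD $A(x) = U(x)S(x)V(x)^T$ from Givens rotations, with $\sigma_1(0) = \sigma_2(0) = 1$ but $\sigma'_1(0) = 1 \neq \sigma'_2(0) = 2$, and solves the resulting $2\times 2$ system for $Z_{pq}, W_{pq}$. Several assumptions are made here: the derivatives of $U$, $V$ and $A$ are approximated by central finite differences (a stand-in, not the method of this chapter), the reduced matrices $Z_R, W_R$ are taken from the numerically differentiated $Z = U^TU'$, $W = V^TV'$ instead of from (5.17) for brevity, and (5.8) is taken to be the $(p,q)$ entry of the identity $Q = ZS + S' - SW$, i.e. $Q_{pq} = \sigma_q Z_{pq} - \sigma_p W_{pq}$.

```python
import numpy as np


def givens(n, a, b, t):
    """n x n rotation by angle t in the (a, b) coordinate plane."""
    G = np.eye(n)
    c, s = np.cos(t), np.sin(t)
    G[a, a] = G[b, b] = c
    G[a, b], G[b, a] = -s, s
    return G


# Hypothetical smooth SVD factors: sigma_1(0) = sigma_2(0) = 1, sigma_1'(0) = 1, sigma_2'(0) = 2.
def U_of(x):
    return givens(3, 0, 1, 0.7 * x) @ givens(3, 0, 2, -0.4 * x) @ givens(3, 1, 2, 1.1 * x)


def V_of(x):
    return givens(3, 0, 1, -0.5 * x) @ givens(3, 1, 2, 0.3 * x) @ givens(3, 0, 2, 0.9 * x)


def S_of(x):
    return np.diag([1.0 + x, 1.0 + 2.0 * x, 3.0])


def A_of(x):
    return U_of(x) @ S_of(x) @ V_of(x).T


def d1(f, x, eps=1e-4):
    """Central first difference (stand-in for the analytic derivative)."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


def d2(f, x, eps=1e-4):
    """Central second difference."""
    return (f(x + eps) - 2 * f(x) + f(x - eps)) / eps**2


def reduced(K, p, q):
    """Reduced matrix: copy of K with the entries (p, q) and (q, p) set to zero."""
    KR = K.copy()
    KR[p, q] = KR[q, p] = 0.0
    return KR


def solve_pair(sig, Q, P2, ZR, WR, p, q):
    """Solve (5.8) together with (5.19) for (Z_pq, W_pq), assuming sigma_p = sigma_q."""
    rhs = (P2[p, q] + P2[q, p] - (ZR @ Q)[p, q] - (ZR @ Q)[q, p]
           + (Q @ WR)[p, q] + (Q @ WR)[q, p])
    dsig = Q[q, q] - Q[p, p]  # sigma_q' - sigma_p', read off the diagonal of Q
    lhs = np.array([[sig[q], -sig[p]], [2 * dsig, 2 * dsig]])
    return np.linalg.solve(lhs, np.array([Q[p, q], rhs]))


x0, p, q = 0.0, 0, 1                  # 0-based indices for (p, q) = (1, 2)
U, V = U_of(x0), V_of(x0)
Z = U.T @ d1(U_of, x0)                # Z = U^T U'
W = V.T @ d1(V_of, x0)                # W = V^T V'
Q = U.T @ d1(A_of, x0) @ V            # Q = U^T A' V
P2 = U.T @ d2(A_of, x0) @ V           # P'' = U^T A'' V
sig = np.diag(S_of(x0))
Zpq, Wpq = solve_pair(sig, Q, P2, reduced(Z, p, q), reduced(W, p, q), p, q)
print(Zpq, Z[p, q], Wpq, W[p, q])     # approx. -0.7, -0.7, 0.5, 0.5
```

Up to finite-difference error, the solution reproduces $Z_{12} = -0.7$ and $W_{12} = 0.5$, the generator entries of the chosen rotations. If $\sigma'_p = \sigma'_q$, the second row of `lhs` vanishes and the system becomes singular, which is exactly the case treated next.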

Equation (5.8) (or (5.9), which contains the same information) and (5.19) determine $Z_{pq}$ and $W_{pq}$ uniquely, and the first derivatives of $U$ and $V$ can be calculated. In the case $\sigma_k = \dots = \sigma_l$, $l - k > 1$, we have to eliminate additional rows and columns in (5.17) and consequently consider more equations similar to (5.19) and (5.8).

If, in addition, $\sigma'_p = \sigma'_q$, the left-hand side of (5.19) vanishes, and (5.19) no longer determines $Z_{pq}$ and $W_{pq}$. To our knowledge, there is no existing procedure in the literature that deals with the case $\sigma_p = \sigma_q$, $\sigma'_p = \sigma'_q$ and $\sigma''_p \neq \sigma''_q$; [75] addresses this issue only briefly, describing it as 'rather long'. The derivation, which is indeed complicated and technical, is presented in Appendix A.3. Here we show the resulting system of linear equations. As before, we assume that $Z_{kl}, W_{kl}$, $(k,l) \neq (p,q)$, have already been computed by (5.17) and (5.13), and that $S'$ is computed from $Q$. Then $Z_{pq}$, $W_{pq}$ can be calculated by solving

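$$\begin{bmatrix}
\begin{matrix} \sigma_p & -\sigma_q \end{matrix} & 0^T \\
\begin{matrix} l_1 + l_2 + l_{3,z} + l_{4,z} & l_1 + l_2 + l_{3,w} + l_{4,w} \end{matrix} & v_l \\
M & \bar S
\end{bmatrix}
\begin{bmatrix} Z_{pq} \\ W_{pq} \\ \bar z' \\ \bar w' \end{bmatrix}
=
\begin{bmatrix} Q_{pq} \\ r_2 + r_3 + r_4 \\ r_5 \end{bmatrix}
\tag{5.20}$$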


with

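$$\begin{aligned}
l_1 &= P''_{qq} - P''_{pp} - (Z_RQ)_{qq} + (Z_RQ)_{pp} + (QW_R)_{qq} - (QW_R)_{pp},\\
l_2 &= 2(P''_{qq} - P''_{pp}),\\
r_2 &= P'''_{pq} + P'''_{qp} - 2(Z_RP'')_{pq} + 2(P''W_R)_{pq} - 2(Z_RP'')_{qp} + 2(P''W_R)_{qp},\\
l_{3,z} &= -(Z_RQ)_{qq} + (Z_RQ)_{pp} + 2(QW_R)_{qq} - 2(QW_R)_{pp},\\
l_{3,w} &= -2(Z_RQ)_{qq} + 2(Z_RQ)_{pp} + (QW_R)_{qq} - (QW_R)_{pp},\\
r_3 &= (Z_RZ_RQ)_{pq} + (Z_RZ_RQ)_{qp} + (QW_RW_R)_{pq} + (QW_RW_R)_{qp} - 2\big((Z_RQW_R)_{pq} + (Z_RQW_R)_{qp}\big),\\
l_{4,z} &= \frac{1}{\sigma_p}(Q_2Q_2^T)_{qq} - \frac{1}{\sigma_q}(Q_2Q_2^T)_{pp},\\
l_{4,w} &= \frac{1}{\sigma_p}(Q_3^TQ_3)_{qq} - \frac{1}{\sigma_q}(Q_3^TQ_3)_{pp},\\
r_4 &= \Big(\Lambda^{-1}\big(\Lambda'Z_2 - (W_R)_1Q_3^T - W_2Q_4^T + (P''_3)^T + Q_1^TZ_2\big)Q_3\Big)_{pq} + (\dots)_{qp}\\
&\quad + \Big(Q_2\big[W_2^T\Lambda + Q_2^T(Z_R)_1 + Q_4^TZ_2^T - (P''_2)^T - W_2^TQ_1^T\big]\Lambda^{-1}\Big)_{pq} + (\dots)_{qp},\\
H &= P''_1 - Z_{1,R}Q_1 - Z_2Q_3 + Q_1W_{1,R} - Q_2W_2^T,\\
h &= \begin{bmatrix} \operatorname{vec}(H) \\ \operatorname{vec}(H^T) \end{bmatrix},\\
r_5 &= h - \bar S'\begin{bmatrix} \bar z \\ \bar w \end{bmatrix},
\end{aligned}$$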

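where, for ease of notation, the term $(\dots)_{qp}$ always contains the same components as the preceding bracketed expression, evaluated at the entry $(q,p)$ instead of $(p,q)$. We use the notation

$$P' := U^TA'V = Q, \qquad P'' := U^TA''V, \qquad P'' = \begin{bmatrix} P''_1 & P''_2 \\ P''_3 & P''_4 \end{bmatrix} = \begin{bmatrix} U_1^TA''V_1 & U_1^TA''V_2 \\ U_2^TA''V_1 & U_2^TA''V_2 \end{bmatrix},$$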

and $v_l \in \mathbb{R}^{1\times(o(o-1)-2)}$ and $M \in \mathbb{R}^{(o(o-1)-2)\times 2}$ are calculated by Algorithms 2 and 3, respectively, shown in Appendix A.3. Note that solving (5.20) also provides all entries of $Z'$ and $W'$ except the entries $(p,q)$, $(q,p)$. This would be useful for the calculation of higher derivatives of the singular vectors, but we will focus on the first derivatives. A small numerical example illustrating the validity of (5.20) is shown in the next section.
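The bordered structure of (5.20) is straightforward to assemble with NumPy. The sketch below is illustrative only: `assemble_5_20` is a hypothetical helper, and $v_l$, $M$ and the scalars $l_\ast$, $r_\ast$ are treated as given inputs, since Algorithms 2 and 3 are not reproduced here. As a check, it plugs in the values of the example in Section 5.2.4; the entry $Q_{12} = (U^TA'V)_{12} = 2/3$ is not printed there and was computed by us from the analytic $U$, $S$, $V$ of that example.

```python
import numpy as np


def assemble_5_20(sig_p, sig_q, c_z, c_w, v_l, M, S_bar, Q_pq, r234, r5):
    """Assemble the bordered system (5.20).

    Unknowns are ordered as [Z_pq, W_pq, z_bar', w_bar'].
    c_z = l1 + l2 + l3z + l4z, c_w = l1 + l2 + l3w + l4w, r234 = r2 + r3 + r4.
    """
    n = S_bar.shape[0]
    top = np.hstack([[sig_p, -sig_q], np.zeros(n)])   # row (5.8) for the pair (p, q)
    mid = np.hstack([[c_z, c_w], v_l])                # second-order condition
    bottom = np.hstack([M, S_bar])                    # coupling to the reduced unknowns
    K = np.vstack([top, mid, bottom])
    rhs = np.concatenate([[Q_pq, r234], r5])
    return K, rhs


# Values of the example in Section 5.2.4 (sigma_1 = sigma_2 = 1, sigma_3 = e at x = 0).
e = np.e
S_bar = np.array([[e, 0, -1, 0], [0, e, 0, -1], [-1, 0, e, 0], [0, -1, 0, e]])
M = np.array([[e / 3, 0], [-e / 3, 0], [0, -1 / 3], [0, 1 / 3]])
v_l = np.array([-1 / 3, -1 / 3, e / 3, e / 3])
r5 = np.array([2 * e / 9, 7 * e / 9, 0, -1]) - np.array([e / 3, e / 3, -1 / 3, -1 / 3])
l1, l2 = -2.0, -4.0                                   # l3 and l4 terms vanish here
K, rhs = assemble_5_20(1.0, 1.0, l1 + l2, l1 + l2, v_l, M, S_bar,
                       Q_pq=2 / 3, r234=8 + 0 - 1 / 9, r5=r5)
sol = np.linalg.solve(K, rhs)
print(sol)   # expected: [-1/3, -1, 0, 1/3, 0, 0] = [Z_12, W_12, z_bar', w_bar']
```

A dense solve is used only for clarity; in practice one would reuse a factorization of $\bar S$, for instance through a Schur complement on the two bordering rows.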


5.2.4. Example of repeated singular values with repeated derivatives

We present an example of our calculation of singular value and singular vector derivatives in the case $\sigma_p = \sigma_q$, $\sigma'_p = \sigma'_q$, $\sigma''_p \neq \sigma''_q$. Let

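$$U := \frac{1}{\sqrt{3}}\begin{bmatrix} \cos x & 1 & \sin x & -1 \\ -\sin x & -1 & \cos x & -1 \\ 1 & -\sin x & 1 & \cos x \\ -1 & \cos x & 1 & \sin x \end{bmatrix}, \qquad V := \begin{bmatrix} \cos x & -\sin x & 0 \\ \sin x & \cos x & 0 \\ 0 & 0 & 1 \end{bmatrix}, \qquad S := \operatorname{diag}\big(e^x,\ \ln(x+1)+1,\ e^{x+1}\big),$$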

and set $A := USV^T$. This is a modified example taken from [5]. $U$ and $V$ are orthogonal, and for $x = 0$ the matrix $A$ has two equal singular values with equal singular value derivatives; we denote $p = 1$, $q = 2$, i.e., we have $i = 1$, $j = 4$. We can derive $Z$ and $W$ and their respective derivatives from these analytic expressions, with $Z_{1,2} = -1/3$, $W_{1,2} = -1$. With (5.13), we obtain $Z_2 = \left[-\tfrac{1}{3}, \tfrac{1}{3}, \tfrac{1}{3}\right]$ and $W_2 = \emptyset$. The system of linear equations

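(5.17) reads as

$$\begin{bmatrix} \sigma_3 & 0 & -\sigma_1 & 0 \\ 0 & \sigma_3 & 0 & -\sigma_2 \\ -\sigma_1 & 0 & \sigma_3 & 0 \\ 0 & -\sigma_2 & 0 & \sigma_3 \end{bmatrix} \begin{bmatrix} Z_{1,3} \\ Z_{2,3} \\ W_{1,3} \\ W_{2,3} \end{bmatrix} = \begin{bmatrix} Q_{1,3} \\ Q_{2,3} \\ Q_{3,1} \\ Q_{3,2} \end{bmatrix}$$

with the result

$$\begin{bmatrix} Z_{1,3} \\ Z_{2,3} \\ W_{1,3} \\ W_{2,3} \end{bmatrix} = \begin{bmatrix} 1/3 \\ 1/3 \\ 0 \\ 0 \end{bmatrix}.$$

With these elements, the reduced matrices have the form

$$Z_R = \begin{bmatrix} 0 & 0 & 1/3 & -1/3 \\ 0 & 0 & 1/3 & 1/3 \\ -1/3 & -1/3 & 0 & 1/3 \\ 1/3 & -1/3 & -1/3 & 0 \end{bmatrix}, \qquad W_R = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}.$$

We now state the elements of (5.20):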

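$$l_1 = -2, \quad l_2 = -4, \quad l_{3,z} = l_{3,w} = 0, \quad l_{4,z} = l_{4,w} = 0, \quad r_2 = 8, \quad r_3 = 0, \quad r_4 = -1/9,$$

$$r_5 = \begin{bmatrix} 2e/9 \\ 7e/9 \\ 0 \\ -1 \end{bmatrix} - \begin{bmatrix} e/3 \\ e/3 \\ -1/3 \\ -1/3 \end{bmatrix}.$$

$M$ and $v_l$ are calculated through Algorithms 2 and 3 as

$$M = \begin{bmatrix} e/3 & 0 \\ -e/3 & 0 \\ 0 & -1/3 \\ 0 & 1/3 \end{bmatrix}, \qquad v_l = \begin{bmatrix} -1/3 \\ -1/3 \\ e/3 \\ e/3 \end{bmatrix}^T.$$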


With these elements, we can solve (5.20) and obtain

$$\begin{bmatrix} Z_{1,2} \\ W_{1,2} \end{bmatrix} = \begin{bmatrix} -1/3 \\ -1 \end{bmatrix}, \qquad \begin{bmatrix} \bar z' \\ \bar w' \end{bmatrix} = \begin{bmatrix} 0 \\ 1/3 \\ 0 \\ 0 \end{bmatrix},$$

which are indeed the desired missing elements of $Z$ and $W$ as established at the beginning of this section. The correctness of the elements $\bar z'$ and $\bar w'$ can easily be checked with the given analytic singular values and singular vectors, which concludes the example.
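The check against the analytic factors can be made concrete. The following NumPy sketch (our own verification, not taken from [5] or from Appendix A.3) evaluates $U$, $V$, $S$ of the example and their analytic derivatives at $x = 0$, forms $Z = U^TU'$, $W = V^TV'$, $Z' = U'^TU' + U^TU''$, $W' = V'^TV' + V^TV''$ and $Q = U^TA'V$, and solves (5.17). We assume that $S$ is padded with a zero row so that $A = USV^T$ is $4\times 3$, which is our reading of the dimensions of $U$ and $V$.

```python
import numpy as np


def U_derivs(x):
    """U(x), U'(x), U''(x) of the example (every entry is +-1, +-cos x or +-sin x)."""
    c, s = np.cos(x), np.sin(x)
    U = np.array([[c, 1, s, -1], [-s, -1, c, -1], [1, -s, 1, c], [-1, c, 1, s]])
    dU = np.array([[-s, 0, c, 0], [-c, 0, -s, 0], [0, -c, 0, -s], [0, -s, 0, c]])
    d2U = np.array([[-c, 0, -s, 0], [s, 0, -c, 0], [0, s, 0, -c], [0, -c, 0, -s]])
    return U / np.sqrt(3), dU / np.sqrt(3), d2U / np.sqrt(3)


def V_derivs(x):
    c, s = np.cos(x), np.sin(x)
    V = np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]])
    dV = np.array([[-s, -c, 0], [c, -s, 0], [0, 0, 0]])
    d2V = np.array([[-c, s, 0], [-s, -c, 0], [0, 0, 0]])
    return V, dV, d2V


def S_derivs(x):
    """S(x) and S'(x), padded with a zero row so that A = U S V^T is 4 x 3."""
    S, dS = np.zeros((4, 3)), np.zeros((4, 3))
    idx = np.arange(3)
    S[idx, idx] = [np.exp(x), np.log(x + 1) + 1, np.exp(x + 1)]
    dS[idx, idx] = [np.exp(x), 1 / (x + 1), np.exp(x + 1)]
    return S, dS


U, dU, d2U = U_derivs(0.0)
V, dV, d2V = V_derivs(0.0)
S, dS = S_derivs(0.0)
Z, W = U.T @ dU, V.T @ dV                       # Z = U^T U', W = V^T V'
dZ = dU.T @ dU + U.T @ d2U                      # Z' = U'^T U' + U^T U''
dW = dV.T @ dV + V.T @ d2V                      # W' = V'^T V' + V^T V''
Q = U.T @ (dU @ S @ V.T + U @ dS @ V.T + U @ S @ dV.T) @ V   # Q = U^T A' V
s1, s2, s3 = np.diag(S)
S_bar = np.array([[s3, 0, -s1, 0], [0, s3, 0, -s2], [-s1, 0, s3, 0], [0, -s2, 0, s3]])
zw = np.linalg.solve(S_bar, [Q[0, 2], Q[1, 2], Q[2, 0], Q[2, 1]])   # system (5.17)

print(Z[0, 1], W[0, 1])                         # Z_12, W_12
print(zw)                                       # [Z_13, Z_23, W_13, W_23]
print(dZ[0, 2], dZ[1, 2], dW[0, 2], dW[1, 2])   # entries of z_bar', w_bar'
```

The printed values agree with $Z_{1,2} = -1/3$, $W_{1,2} = -1$, the solution $[1/3, 1/3, 0, 0]$ of (5.17) and $[\bar z'; \bar w'] = [0, 1/3, 0, 0]$ stated in the text.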


6. Derivatives of 3D MUSIC

With the algorithms provided in Chapters 4 and 5, we are now able to differentiate the MUSIC cost function with systematic errors (3.7). We will need these derivatives in the analysis of the asymptotic behavior of the estimation error in Chapter 7. To our knowledge, these derivatives are not given anywhere in the extensive literature on MUSIC or in the proposed algorithms. They might be of interest in their own right, since exact rather than approximate knowledge of the derivatives has definite advantages for solving the inverse optimization problem. We recall the perturbed MUSIC projection function

$$F(x,h) = U^T(x)\,\Pi(h)\,U(x) \tag{6.1}$$

and the cost function (3.7)

$$f(x,h) = \lambda_{\max}\big(U^T(x)\,\Pi(h)\,U(x)\big) =: \lambda_{\max}\big(F(x,h)\big). \tag{6.2}$$

We calculate the required derivatives of the maximum eigenvalue through the algorithm presented in Chapter 4, but since $\lambda_{\max}$ in (6.2) is simple by assumption, we are able to describe the derivative of (6.2) with respect to $h_i$ in closed form as (see [44])

$$\frac{\partial}{\partial h_i} f(x,h) = u_{\max}^T\, \frac{\partial}{\partial h_i}\big[F(x,h)\big]\, u_{\max},$$

where $u_{\max}(x,h)$ is the normalized eigenvector corresponding to $\lambda_{\max}(F)$. This allows us to describe higher-order derivatives of $f$ in closed form as well, rather than through a linear system. First- and second-order derivatives of the MUSIC projector $\Pi$ are calculated in [73], Appendix B. To abbreviate notation, we write $\frac{\partial}{\partial h_i}K = K_{h_i}$ and $\frac{\partial}{\partial x_i}K = K_{x_i}$ for a quantity $K$. We set $y_{h_i} := A_{h_i}x$, $y_{h_ih_j} := A_{h_ih_j}x$ and $\Pi^\perp := I - \Pi$ and get

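$$\begin{aligned}
\frac{\partial}{\partial h_i}\Pi &= \left(\Pi^\perp y_{h_i} y^+\right) + (\dots)^T,\\
\frac{\partial^2}{\partial h_i \partial h_j}\Pi &= \left(-\Pi_{h_j} y_{h_i} y^+ + \Pi^\perp y_{h_ih_j} y^+ + \Pi^\perp y_{h_i}(y^Ty)^{-1}y_{h_j}^T\Pi^\perp - \Pi^\perp y_{h_i} y^+ y_{h_j} y^+\right) + (\dots)^T,
\end{aligned}$$

where $(\dots)^T$ denotes the transpose of the preceding bracketed expression.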
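Both projector derivatives can be verified numerically. The sketch below assumes a hypothetical smooth one-parameter matrix $y(h) = Y_0 + hY_1 + \tfrac12 h^2 Y_2$ of full column rank (random data, not a lead field), so that $y_h = Y_1$ and $y_{hh} = Y_2$ at $h = 0$, and compares the closed forms with central finite differences of $\Pi = yy^+$.

```python
import numpy as np


def proj(Y):
    """Orthogonal projector onto range(Y), Pi = Y Y^+."""
    return Y @ np.linalg.pinv(Y)


def dproj(Y, Yi):
    """First derivative: Pi_perp y_hi y^+ plus its transpose."""
    Yp = np.linalg.pinv(Y)
    T = (np.eye(Y.shape[0]) - Y @ Yp) @ Yi @ Yp
    return T + T.T


def d2proj(Y, Yi, Yj, Yij):
    """Second derivative, term by term as in the formula for d^2 Pi / (dh_i dh_j)."""
    Yp = np.linalg.pinv(Y)
    Pperp = np.eye(Y.shape[0]) - Y @ Yp
    G = np.linalg.inv(Y.T @ Y)                     # (y^T y)^{-1}
    T = (-dproj(Y, Yj) @ Yi @ Yp
         + Pperp @ Yij @ Yp
         + Pperp @ Yi @ G @ Yj.T @ Pperp
         - Pperp @ Yi @ Yp @ Yj @ Yp)
    return T + T.T


rng = np.random.default_rng(0)
Y0, Y1, Y2 = (rng.standard_normal((6, 2)) for _ in range(3))


def Y_of(h):
    """Hypothetical smooth y(h) with y(0) = Y0, y_h(0) = Y1, y_hh = Y2."""
    return Y0 + h * Y1 + 0.5 * h**2 * Y2


eps1, eps2 = 1e-5, 1e-4
fd1 = (proj(Y_of(eps1)) - proj(Y_of(-eps1))) / (2 * eps1)
fd2 = (proj(Y_of(eps2)) - 2 * proj(Y_of(0.0)) + proj(Y_of(-eps2))) / eps2**2
err1 = np.max(np.abs(fd1 - dproj(Y0, Y1)))
err2 = np.max(np.abs(fd2 - d2proj(Y0, Y1, Y1, Y2)))
print(err1, err2)   # both small, limited by finite-difference accuracy
```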

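To give an impression of the structure of the derivatives of (6.2), we show some of them; extensions to higher derivatives should be straightforward.

Derivatives w.r.t. $h$:

$$\begin{aligned}
\frac{\partial}{\partial h_i} f(x,h) &= u_{\max}^T U^T \Pi_{h_i} U u_{\max},\\
\frac{\partial^2}{\partial h_i \partial h_j} f(x,h) &= (u_{\max})_{h_j}^T U^T \Pi_{h_i} U u_{\max} + u_{\max}^T U^T \Pi_{h_ih_j} U u_{\max} + u_{\max}^T U^T \Pi_{h_i} U (u_{\max})_{h_j}.
\end{aligned}$$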
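The closed form for $f_{h_i}$ can likewise be compared with a finite difference of $\lambda_{\max}$. In this sketch, $U$ is a random matrix with orthonormal columns standing in for $U(x)$ at a fixed $x$, and $\Pi(h)$ is the projector onto the range of the hypothetical matrix $Y_0 + hY_1$ with scalar $h$; $\Pi_h$ is evaluated with the first-order projector formula, and $\lambda_{\max}$ is assumed to be simple, as in the text.

```python
import numpy as np


def proj(Y):
    """Orthogonal projector onto range(Y)."""
    return Y @ np.linalg.pinv(Y)


def dproj(Y, Yh):
    """Closed-form Pi_h = Pi_perp y_h y^+ plus its transpose."""
    Yp = np.linalg.pinv(Y)
    T = (np.eye(Y.shape[0]) - Y @ Yp) @ Yh @ Yp
    return T + T.T


def lam_max(F):
    """Largest eigenvalue and a unit eigenvector of the symmetric matrix F."""
    w, vecs = np.linalg.eigh(F)          # eigenvalues in ascending order
    return w[-1], vecs[:, -1]


rng = np.random.default_rng(1)
m = 8
U = np.linalg.qr(rng.standard_normal((m, 3)))[0]      # stand-in for U(x) at fixed x
Y0, Y1 = rng.standard_normal((m, 2)), rng.standard_normal((m, 2))


def f(h):
    """f(h) = lambda_max(U^T Pi(h) U) for the hypothetical Pi(h)."""
    return lam_max(U.T @ proj(Y0 + h * Y1) @ U)[0]


_, u = lam_max(U.T @ proj(Y0) @ U)
f_h_formula = u @ U.T @ dproj(Y0, Y1) @ U @ u        # u^T U^T Pi_h U u
eps = 1e-6
f_h_fd = (f(eps) - f(-eps)) / (2 * eps)
print(f_h_formula, f_h_fd)
```

The sign ambiguity of the eigenvector does not matter, since $u_{\max}$ enters quadratically.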


Derivatives w.r.t. x:

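$$\begin{aligned}
\frac{\partial}{\partial x_i} f(x,h) &= u_{\max}^T\left(U_{x_i}^T\Pi U + U^T\Pi U_{x_i}\right)u_{\max},\\
\frac{\partial^2}{\partial x_i \partial x_j} f(x,h) &= (u_{\max})_{x_j}^T\left(U_{x_i}^T\Pi U + U^T\Pi U_{x_i}\right)u_{\max}\\
&\quad + u_{\max}^T\left(U_{x_i}^T\Pi U + U^T\Pi U_{x_i}\right)_{x_j}u_{\max}\\
&\quad + u_{\max}^T\left(U_{x_i}^T\Pi U + U^T\Pi U_{x_i}\right)(u_{\max})_{x_j}.
\end{aligned}$$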
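A corresponding check of $f_{x_i}$ for scalar $x$ is sketched below, under the assumption of a hypothetical smooth (not necessarily orthonormal) $U(x) = L_0 + xL_1 + \sin(x)L_2$ with random $L_k$ and a fixed projector $\Pi$; the formula only uses that $F = U^T\Pi U$ is symmetric with a simple largest eigenvalue.

```python
import numpy as np

rng = np.random.default_rng(2)
m = 8
L0, L1, L2 = (rng.standard_normal((m, 3)) for _ in range(3))
B = rng.standard_normal((m, 2))
Pi = B @ np.linalg.pinv(B)                          # fixed projector, h held constant


def U_of(x):
    """Hypothetical smooth U(x) for scalar x (not a lead field)."""
    return L0 + x * L1 + np.sin(x) * L2


def U_x(x):
    """Its derivative with respect to x."""
    return L1 + np.cos(x) * L2


def f(x):
    """f(x) = lambda_max(U(x)^T Pi U(x))."""
    return np.linalg.eigvalsh(U_of(x).T @ Pi @ U_of(x))[-1]


x0 = 0.3
U, Ux = U_of(x0), U_x(x0)
u = np.linalg.eigh(U.T @ Pi @ U)[1][:, -1]           # unit eigenvector of lambda_max
f_x_formula = u @ (Ux.T @ Pi @ U + U.T @ Pi @ Ux) @ u
eps = 1e-6
f_x_fd = (f(x0 + eps) - f(x0 - eps)) / (2 * eps)
print(f_x_formula, f_x_fd)
```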

Mixed derivatives:

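$$\begin{aligned}
\frac{\partial^2}{\partial h_i \partial x_j} f(x,h) &= (u_{\max})_{x_j}^T U^T\Pi_{h_i}U u_{\max} + u_{\max}^T U_{x_j}^T \Pi_{h_i} U u_{\max}\\
&\quad + u_{\max}^T U^T \Pi_{h_i} U_{x_j} u_{\max} + u_{\max}^T U^T \Pi_{h_i} U (u_{\max})_{x_j}.
\end{aligned}$$

Note that, despite the different appearance, we have $f(x,h)_{x_ih_j} = f(x,h)_{h_jx_i}$.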


7. Expectation of estimation error

7.1. Introduction

The main goal of this work is to establish an expression for the bias of the MUSIC cost function in the presence of modeling errors. In Section 3.3, we established the perturbed 3D MUSIC cost function

$$f(x,h) = \lambda_{\max}\big(U^T(x)\,\Pi(h)\,U(x)\big). \tag{7.1}$$

The derivatives of $f$ w.r.t. $x$ and $h$ up to arbitrary order are treated in Chapter 6. Several authors have considered error estimates for the distance between the true source position $x$ and the estimated perturbed position $\hat x$ due to modeling errors in the case of scalar $x$, in which (7.1) takes the form (3.3). We assume the asymptotic case, in which the projection matrix $\Pi(h)$ is fully known. Generally, the perturbation $h$ is not known in an application. To obtain results, it is treated as a random variable, and the expectation or higher-order moments of the distance estimate are calculated.

The existing approaches vary in the modeling of $h$ and the order of the methods used. [35] examined the effects of finite sampling, which we do not address in this work. Swindlehurst [68] and Friedlander [25] assumed infinite sampling and used a first-order approach for both the position error and the modeling error. As a result of the first-order treatment of the modeling error, the MUSIC cost function was found to be unbiased. Ferréol et al. [21] extended this approach to a first-order position error with a second-order treatment of the modeling error, which resulted in a biased estimator even for zero-mean $h$. Their results are extended in [22] to the probability of the proper resolution of two distinct sources.

To our knowledge, no author has treated fully 3D problems yet. We will use the approach in [21], but will treat the modeling error as generally as possible. Furthermore, we propose a fixed-point iteration scheme to augment the position error to potentially second order. Sections 7.2.1 and 7.2.2 recap and simplify the approach used by Ferréol. Section 7.2.3 gives a method to generate a second-order position error. We will adapt the methods from these sections to 3D problems in the remaining sections of this chapter.

7.2. Scalar valued x

7.2.1. Estimation error as ratio of quadratic forms

In this section, we consider scalar $x$ and $h \in \mathbb{R}^m$ to clarify the ideas used in our approach. We will mainly rely on several Taylor expansions to create an approximation


of the estimation error as a ratio of quadratic forms. The derivation parallels the one shown in [21]. In Section 7.3, we will extend this to vector-valued $x$ and $h$. This does not change the theoretical approach significantly, but it increases the size of the involved matrices and therefore the computational load drastically. This problem has to be considered separately (see Chapter 8).

We want to derive an estimate for the estimation error $\hat x - x$, where $\hat x$ denotes the estimated (perturbed) source position and $x$ the true one. Let $f_x$ denote the derivative of the MUSIC cost function $f(x,h)$ w.r.t. $x \in \mathbb{R}$. Since $\hat x$ maximizes $f(\cdot, h)$, we have $f_x(\hat x, h) = 0$. Assuming $f_{xx}(x,h) \neq 0$ for all $x \in \mathbb{R}$, $h \in \mathbb{R}^m$, we can use a first-order Taylor expansion of $f_x(\hat x, h)$ in its first argument around the true position $x$:

$$\begin{aligned}
0 = f_x(\hat x, h) &\approx f_x(x,h) + f_{xx}(x,h)(\hat x - x),\\
\hat x - x &\approx -\frac{f_x(x,h)}{f_{xx}(x,h)}.
\end{aligned}\tag{7.2}$$
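The first-order estimate can be tried on a toy problem. Since $\hat x$ maximizes $f(\cdot,h)$, the expansion $0 = f_x(\hat x,h) \approx f_x(x,h) + f_{xx}(x,h)(\hat x - x)$ gives $\hat x - x \approx -f_x(x,h)/f_{xx}(x,h)$. The sketch below evaluates this with central differences for the hypothetical scalar cost $f(x,h) = -\cosh(x - h)$, which is not a MUSIC cost function: its true maximizer is $\hat x = h$ while the true position is $x = 0$, and the first-order formula gives exactly $\tanh h$.

```python
import numpy as np


def first_order_error(f, x, h, eps=1e-4):
    """First-order estimate of x_hat - x via -f_x(x, h) / f_xx(x, h), cf. (7.2).

    Derivatives in x are approximated by central differences (an assumption of
    this sketch; the text uses exact derivatives from Chapter 6).
    """
    fx = (f(x + eps, h) - f(x - eps, h)) / (2 * eps)
    fxx = (f(x + eps, h) - 2 * f(x, h) + f(x - eps, h)) / eps**2
    return -fx / fxx


def toy_cost(x, h):
    """Hypothetical scalar cost with maximizer x_hat = h; the true position is x = 0."""
    return -np.cosh(x - h)


h = 0.01
est = first_order_error(toy_cost, 0.0, h)
print(est, np.tanh(h), h)   # first-order estimate, its exact value tanh(h), true shift h
```

For $h = 0.01$, the estimate differs from the exact shift by about $h^3/3 \approx 3\cdot 10^{-7}$, illustrating that the neglected terms are of higher order in $h$.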

(7.2) still depends nonlinearly on $h$. To overcome this, we use second-order Taylor expansions with respect to $h$ at $0$ to form quadratic forms of $f_x$ and $f_{xx}$:

fx(x, h) ≈ fx(x, 0) + f ′x(x, 0)h+ 0.5f ′′x (x, 0)h2

=

[1h

]T [fx(x, 0) f ′x(x, 0)